Deep Learning (03) - Neural Network Training / MNIST

Neural Network Training

MNIST and Fashion-MNIST

In [1]:

from keras.datasets.mnist import load_data

In [2]:

(x_train, y_train), (x_test, y_test) = load_data()

Out [2]:

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/mnist.npz

11490434/11490434 [==============================] - 0s 0us/step

- Check the data shapes

In [3]:

x_train.shape

Out [3]:

(60000, 28, 28)

In [4]:

x_test.shape

Out [4]:

(10000, 28, 28)

In [5]:

y_test.shape

Out [5]:

(10000,)

In [6]:

y_train[:5]

Out [6]:

array([5, 0, 4, 1, 9], dtype=uint8)

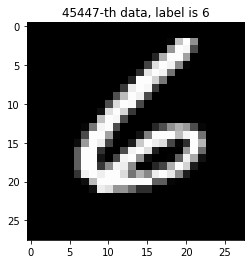

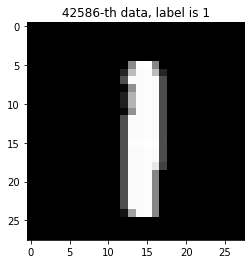

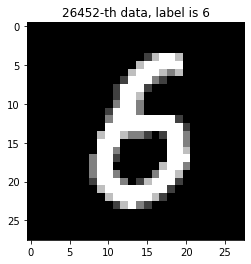

- Plot the data

In [7]:

import matplotlib.pyplot as plt

import numpy as np

In [8]:

sample_size = 3

random_idx = np.random.randint(60000, size=sample_size)

random_idx

Out [8]:

array([45447, 42586, 26452])

In [9]:

for idx in random_idx:

    img = x_train[idx, :]

    label = y_train[idx]

    plt.figure()

    plt.imshow(img, cmap='gray')

    plt.title(f'{idx}-th data, label is {label}')

Out [9]:

In [10]:

x_train[0].min(), x_train[0].max()

Out [10]:

(0, 255)

- Create validation data

In [11]:

from sklearn.model_selection import train_test_split

In [12]:

x_train, x_val, y_train, y_val = train_test_split(x_train, y_train,

                                                  test_size=0.3, random_state=777)

print(x_train.shape, y_train.shape)

Out [12]:

(42000, 28, 28) (42000,)
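The 42,000 training rows follow from `test_size=0.3` applied to 60,000 samples. The sketch below reproduces only the size arithmetic with a plain shuffle-and-slice in NumPy. The helper `shuffle_split` is hypothetical and is not how scikit-learn implements `train_test_split` internally, so the selected indices will differ from the real split.

```python
import math
import numpy as np

def shuffle_split(n_samples, test_size, seed=777):
    """Hypothetical helper: shuffle indices, then hold out a fraction."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_samples)
    n_val = math.ceil(n_samples * test_size)  # held-out count, rounded up
    return idx[n_val:], idx[:n_val]

train_idx, val_idx = shuffle_split(60000, 0.3)
print(len(train_idx), len(val_idx))
```

With these sizes the remaining 18,000 samples end up in `x_val` and `y_val`.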

- Data preprocessing

In [13]:

x_train = (x_train.reshape((-1, 28*28)))/255 # flatten each 28*28 image to 784 values and scale to the 0~1 range

In [14]:

x_train.min(), x_train.max()

Out [14]:

(0.0, 1.0)

In [15]:

x_val = (x_val.reshape((-1, 28*28)))/255

x_test = (x_test.reshape((-1, 28*28)))/255
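To see what the reshape and the division do without downloading MNIST, here is a small sketch on a synthetic batch. The array `images` is made-up random data standing in for `x_train`; only the shapes and value range mirror the real dataset.

```python
import numpy as np

# Synthetic stand-in for a batch of MNIST images: 4 images, 28x28, uint8 pixels.
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(4, 28, 28), dtype=np.uint8)

# -1 lets NumPy infer the number of rows; each image becomes one row of 784 values.
flat = images.reshape((-1, 28 * 28)) / 255

print(flat.shape, flat.dtype)
print(flat.min(), flat.max())
```

Dividing a `uint8` array by an integer yields floats, so no explicit cast is needed before scaling.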

- Label preprocessing (one-hot encoding)

In [16]:

from keras.utils import to_categorical

In [17]:

y_train = to_categorical(y_train)

In [18]:

y_train

Out [18]:

array([[0., 0., 1., ..., 0., 0., 0.],

[0., 0., 0., ..., 1., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

...,

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.],

[0., 0., 0., ..., 0., 0., 0.]], dtype=float32)

In [19]:

y_val = to_categorical(y_val)

y_test = to_categorical(y_test)
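One-hot encoding turns each integer label into a length-10 vector with a single 1. A minimal NumPy sketch of the same idea (an illustration of the encoding, not the Keras implementation), using the first five training labels shown earlier:

```python
import numpy as np

labels = np.array([5, 0, 4, 1, 9], dtype=np.uint8)  # first five MNIST training labels
num_classes = 10

# Row i of the identity matrix is the one-hot vector for class i.
one_hot = np.eye(num_classes, dtype=np.float32)[labels]

print(one_hot.shape)
print(one_hot.argmax(axis=1))  # argmax recovers the original labels
```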

Building the Model

In [20]:

from keras.models import Sequential

from keras.layers import Dense

In [21]:

model = Sequential()

model.add(Dense(64, activation='relu', input_shape=(784,))) # 784 (inputs) * 64 (units) + 64 (biases) parameters; the first layer must specify input_shape

model.add(Dense(32, activation='relu'))

model.add(Dense(10, activation='softmax')) # the last layer must specify how many outputs there are; softmax: the outputs sum to 1 (100%)
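The parameter count noted in the first layer's comment can be worked out for every layer. A short sketch, where `dense_params` is a hypothetical helper name and the layer sizes are the ones used in this model:

```python
def dense_params(n_inputs, n_units):
    """Weights plus one bias per unit for a fully connected layer."""
    return n_inputs * n_units + n_units

layer_sizes = [784, 64, 32, 10]  # input, two hidden layers, output
per_layer = [dense_params(i, o) for i, o in zip(layer_sizes, layer_sizes[1:])]
total = sum(per_layer)

print(per_layer)  # [50240, 2080, 330]
print(total)      # 52650
```

These figures should match what `model.summary()` reports for the three `Dense` layers.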

In [22]:

model.compile(optimizer='adam',

              loss='categorical_crossentropy', # softmax with one-hot labels -> categorical_crossentropy; integer labels -> sparse_categorical_crossentropy

              metrics=['acc']) # evaluation metric: accuracy
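The two loss names differ only in the label format they expect. The NumPy sketch below, using made-up logits for three classes, shows that softmax outputs sum to 1 and that the one-hot and integer-label forms of cross-entropy give the same value. It illustrates the formulas only and is not the Keras implementation.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 2.5, 0.3]])  # made-up scores: 2 samples, 3 classes
probs = softmax(logits)

int_labels = np.array([0, 1])
one_hot = np.eye(3)[int_labels]

# Categorical form: one-hot targets select the log-probability of the true class.
cce = -(one_hot * np.log(probs)).sum(axis=1).mean()
# Sparse form: integer targets index the true-class probability directly.
scce = -np.log(probs[np.arange(len(int_labels)), int_labels]).mean()

print(probs.sum(axis=1))
print(cce, scce)
```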

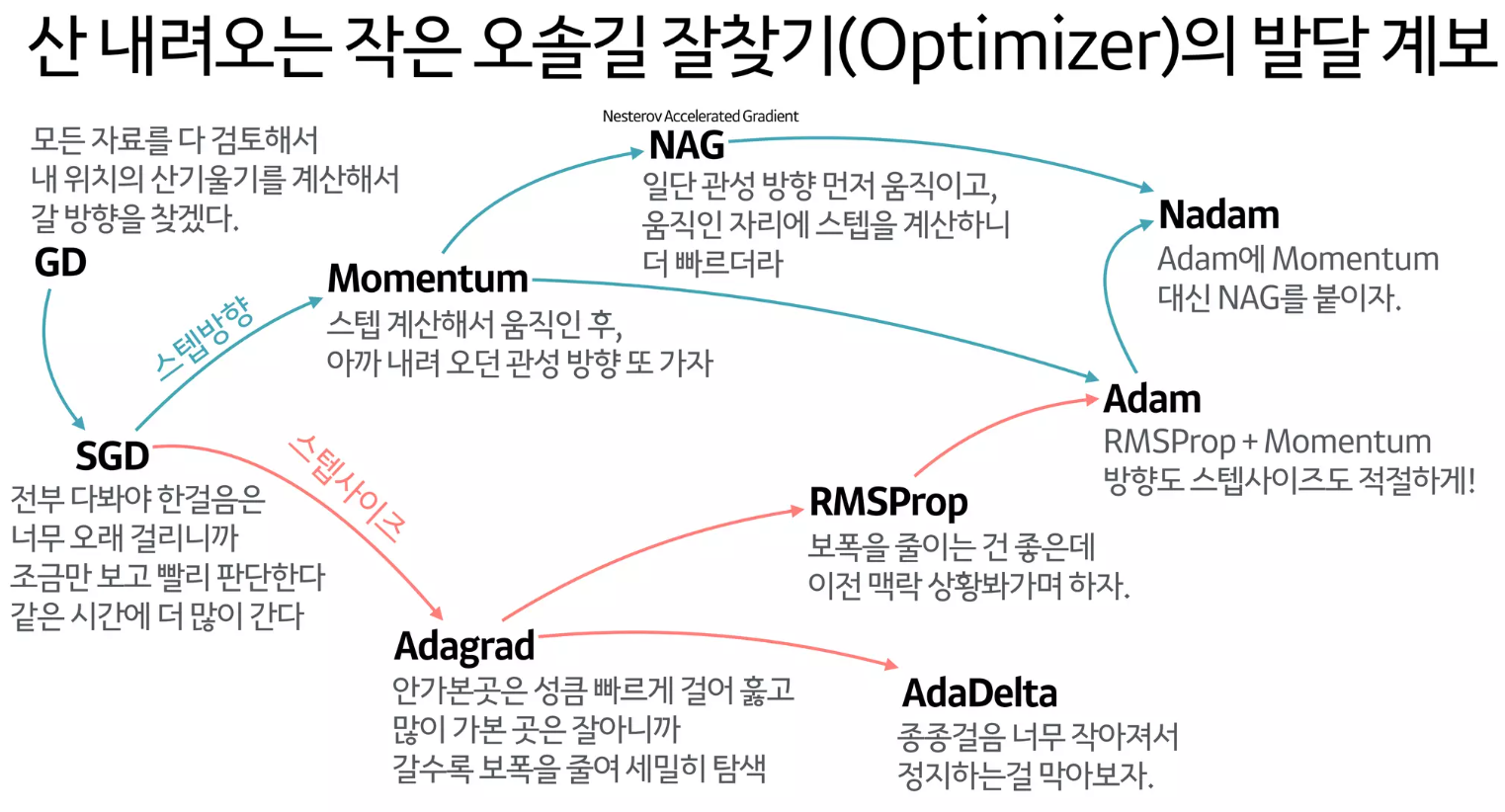

- Types of optimizers

Training the Model

In [23]:

history = model.fit(x_train, y_train,

                    epochs = 30,

                    batch_size = 128,

                    validation_data = (x_val, y_val))

Out [23]:

Epoch 1/30

329/329 [==============================] - 2s 5ms/step - loss: 0.4993 - acc: 0.8583 - val_loss: 0.2398 - val_acc: 0.9313

Epoch 2/30

329/329 [==============================] - 1s 4ms/step - loss: 0.1984 - acc: 0.9428 - val_loss: 0.1893 - val_acc: 0.9457

Epoch 3/30

329/329 [==============================] - 2s 5ms/step - loss: 0.1509 - acc: 0.9560 - val_loss: 0.1579 - val_acc: 0.9543

Epoch 4/30

329/329 [==============================] - 1s 4ms/step - loss: 0.1250 - acc: 0.9639 - val_loss: 0.1392 - val_acc: 0.9596

Epoch 5/30

329/329 [==============================] - 1s 4ms/step - loss: 0.1040 - acc: 0.9692 - val_loss: 0.1312 - val_acc: 0.9608

Epoch 6/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0888 - acc: 0.9734 - val_loss: 0.1231 - val_acc: 0.9632

Epoch 7/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0773 - acc: 0.9770 - val_loss: 0.1182 - val_acc: 0.9649

Epoch 8/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0665 - acc: 0.9807 - val_loss: 0.1134 - val_acc: 0.9652

Epoch 9/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0598 - acc: 0.9820 - val_loss: 0.1091 - val_acc: 0.9678

Epoch 10/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0517 - acc: 0.9852 - val_loss: 0.1073 - val_acc: 0.9684

Epoch 11/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0461 - acc: 0.9867 - val_loss: 0.1080 - val_acc: 0.9682

Epoch 12/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0404 - acc: 0.9883 - val_loss: 0.1079 - val_acc: 0.9707

Epoch 13/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0353 - acc: 0.9897 - val_loss: 0.1131 - val_acc: 0.9697

Epoch 14/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0310 - acc: 0.9913 - val_loss: 0.1150 - val_acc: 0.9682

Epoch 15/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0272 - acc: 0.9926 - val_loss: 0.1217 - val_acc: 0.9673

Epoch 16/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0247 - acc: 0.9928 - val_loss: 0.1161 - val_acc: 0.9692

Epoch 17/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0207 - acc: 0.9942 - val_loss: 0.1130 - val_acc: 0.9713

Epoch 18/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0181 - acc: 0.9955 - val_loss: 0.1214 - val_acc: 0.9699

Epoch 19/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0164 - acc: 0.9957 - val_loss: 0.1260 - val_acc: 0.9692

Epoch 20/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0147 - acc: 0.9956 - val_loss: 0.1243 - val_acc: 0.9693

Epoch 21/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0143 - acc: 0.9963 - val_loss: 0.1298 - val_acc: 0.9694

Epoch 22/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0121 - acc: 0.9969 - val_loss: 0.1287 - val_acc: 0.9684

Epoch 23/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0105 - acc: 0.9974 - val_loss: 0.1391 - val_acc: 0.9677

Epoch 24/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0094 - acc: 0.9979 - val_loss: 0.1313 - val_acc: 0.9693

Epoch 25/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0075 - acc: 0.9985 - val_loss: 0.1358 - val_acc: 0.9697

Epoch 26/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0060 - acc: 0.9989 - val_loss: 0.1398 - val_acc: 0.9701

Epoch 27/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0089 - acc: 0.9976 - val_loss: 0.1515 - val_acc: 0.9678

Epoch 28/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0154 - acc: 0.9948 - val_loss: 0.1546 - val_acc: 0.9681

Epoch 29/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0051 - acc: 0.9990 - val_loss: 0.1432 - val_acc: 0.9706

Epoch 30/30

329/329 [==============================] - 1s 4ms/step - loss: 0.0030 - acc: 0.9995 - val_loss: 0.1517 - val_acc: 0.9699
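The `329/329` in each progress bar is the number of batches per epoch. It follows from the 42,000 training samples and `batch_size = 128`:

```python
import math

train_samples = 42000
batch_size = 128

steps_per_epoch = math.ceil(train_samples / batch_size)
last_batch = train_samples - (steps_per_epoch - 1) * batch_size

print(steps_per_epoch)  # 329
print(last_batch)       # 16 samples in the final, partial batch
```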

- Values stored in history

In [24]:

history.history.keys()

Out [24]:

dict_keys(['loss', 'acc', 'val_loss', 'val_acc'])

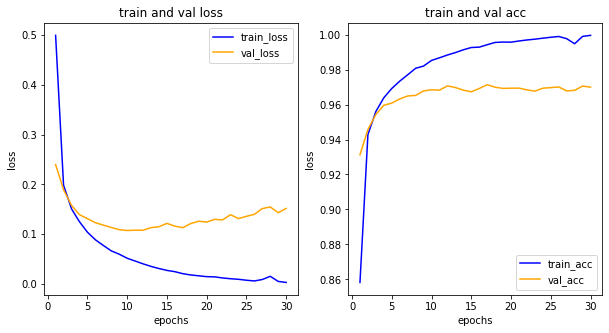

- Plot the training results

In [25]:

import matplotlib.pyplot as plt

In [26]:

his_dict = history.history

loss = his_dict['loss']

val_loss = his_dict['val_loss']

epochs = range(1, len(loss)+1)

fig = plt.figure(figsize=(10, 5))

ax1 = fig.add_subplot(1, 2, 1) # first plot in a 1-row, 2-column grid

ax1.plot(epochs, loss, color='blue', label='train_loss')

ax1.plot(epochs, val_loss, color='orange', label='val_loss')

ax1.set_title('train and val loss')

ax1.set_xlabel('epochs')

ax1.set_ylabel('loss')

ax1.legend()

acc = his_dict['acc']

val_acc = his_dict['val_acc']

ax2 = fig.add_subplot(1, 2, 2) # second plot in a 1-row, 2-column grid

ax2.plot(epochs, acc, color='blue', label='train_acc')

ax2.plot(epochs, val_acc, color='orange', label='val_acc')

ax2.set_title('train and val acc')

ax2.set_xlabel('epochs')

ax2.set_ylabel('acc')

ax2.legend()

Out [26]:

<matplotlib.legend.Legend at 0x7f0683e82130>

The point where overfitting begins can be seen in these plots!

Comparing Predictions with the Ground Truth
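One simple way to put a number on that point, assuming "lowest validation loss" as the criterion, is to look for the epoch where `val_loss` bottoms out. The values below are copied from the training log above; with a live `history` object the same list is available as `history.history['val_loss']`.

```python
import numpy as np

# val_loss per epoch, copied from the training log (epochs 1 to 30).
val_loss = [0.2398, 0.1893, 0.1579, 0.1392, 0.1312, 0.1231, 0.1182, 0.1134,
            0.1091, 0.1073, 0.1080, 0.1079, 0.1131, 0.1150, 0.1217, 0.1161,
            0.1130, 0.1214, 0.1260, 0.1243, 0.1298, 0.1287, 0.1391, 0.1313,
            0.1358, 0.1398, 0.1515, 0.1546, 0.1432, 0.1517]

best_epoch = int(np.argmin(val_loss)) + 1  # +1 because epochs are counted from 1
print(best_epoch, val_loss[best_epoch - 1])  # 10 0.1073
```

After that epoch the training loss keeps falling while the validation loss drifts upward, which is the usual signature of overfitting.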

In [27]:

model.evaluate(x_test, y_test)

Out [27]:

313/313 [==============================] - 1s 2ms/step - loss: 0.1398 - acc: 0.9708

[0.13981212675571442, 0.97079998254776]

In [28]:

results = model.predict(x_test)

Out [28]:

313/313 [==============================] - 1s 1ms/step

In [29]:

results.shape

Out [29]:

(10000, 10)

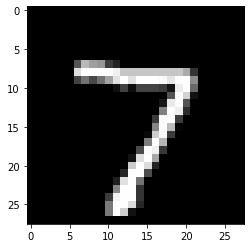

In [30]:

np.round(results[0], 4)

Out [30]:

array([0., 0., 0., 0., 0., 0., 0., 1., 0., 0.], dtype=float32)

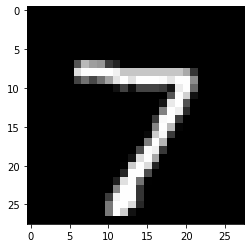

In [31]:

np.argmax(results[0])

Out [31]:

7

In [32]:

np.argmax(y_test[0])

Out [32]:

7
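The same `argmax` comparison can be applied to every row at once to get an accuracy figure. A sketch on small made-up arrays (4 samples, 3 classes) standing in for `results` and `y_test`:

```python
import numpy as np

# Made-up predicted probabilities and one-hot ground truth.
probs = np.array([[0.1, 0.7, 0.2],
                  [0.8, 0.1, 0.1],
                  [0.2, 0.3, 0.5],
                  [0.6, 0.3, 0.1]])
one_hot_true = np.eye(3)[[1, 0, 2, 1]]

pred_labels = probs.argmax(axis=1)         # most probable class per row
true_labels = one_hot_true.argmax(axis=1)  # position of the 1 in each row
accuracy = (pred_labels == true_labels).mean()

print(pred_labels, true_labels, accuracy)  # [1 0 2 0] [1 0 2 1] 0.75
```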

In [33]:

plt.imshow(x_test[0].reshape(28, 28), cmap='gray')

Out [33]:

<matplotlib.image.AxesImage at 0x7f0683dced30>

In [34]:

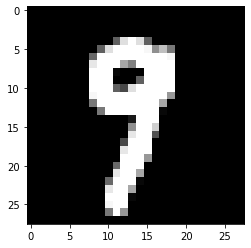

import glob

from PIL import Image

In [35]:

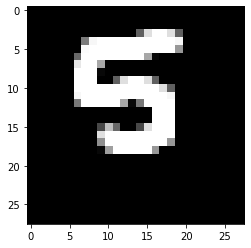

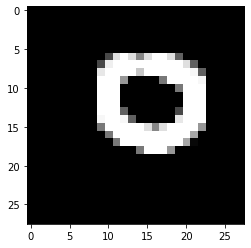

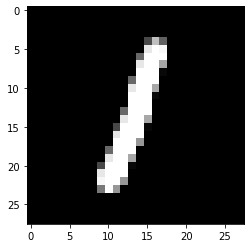

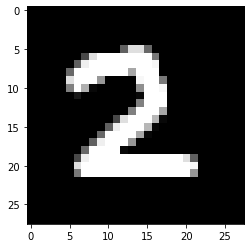

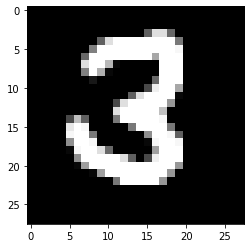

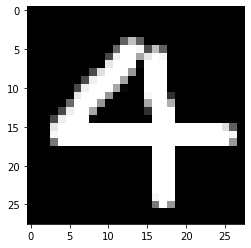

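for path in glob.glob('/content/drive/MyDrive/Colab Notebooks/deep_learning/03_mnist_img/*.png'):

    img = Image.open(path).convert('L') # load as 8-bit grayscale

    img = np.resize(img, (1, 784)) # flatten to one row of 784 values (assumes the image is already 28x28)

    img = 255.0 - (img) # invert so the digit is bright on a dark background, as in MNIST; values stay in 0~255 here, unlike the 0~1 training data

    plt.imshow(img.reshape(28, 28), cmap='gray')

    plt.show()

    pred = model.predict(img)

    print(np.round(pred, 4)) # class probabilities

    print(np.argmax(pred)) # predicted digit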

Out [35]:

1/1 [==============================] - 0s 17ms/step

[[0. 0. 0. 0. 0. 0. 0. 1. 0. 0.]]

7

1/1 [==============================] - 0s 21ms/step

[[0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]]

9

1/1 [==============================] - 0s 20ms/step

[[0. 1. 0. 0. 0. 0. 0. 0. 0. 0.]]

1

1/1 [==============================] - 0s 19ms/step

[[0. 0. 1. 0. 0. 0. 0. 0. 0. 0.]]

2

1/1 [==============================] - 0s 23ms/step

[[0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]]

3

1/1 [==============================] - 0s 19ms/step

[[0. 0. 0. 0. 1. 0. 0. 0. 0. 0.]]

4

1/1 [==============================] - 0s 17ms/step

[[0. 0. 0. 0. 0. 0. 1. 0. 0. 0.]]

6

1/1 [==============================] - 0s 19ms/step

[[0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]]

8

1/1 [==============================] - 0s 17ms/step

[[0. 0. 0. 0. 0. 0. 0. 0. 1. 0.]]

8

1/1 [==============================] - 0s 18ms/step

[[0. 0. 0. 0. 0. 0. 0. 0. 0. 1.]]

9
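The loop above inverts the image but leaves pixel values in the 0~255 range, while the training data was scaled to 0~1. A variant that also applies the training-time scaling could look like the sketch below. The helper `prepare_digit` is hypothetical, works on a 28x28 grayscale array rather than a file, and is shown on a synthetic image; it is an alternative to consider, not the preprocessing that produced the outputs above.

```python
import numpy as np

def prepare_digit(gray):
    """Hypothetical helper: invert a 28x28 grayscale array and scale it to [0, 1]."""
    gray = np.asarray(gray, dtype=np.float32)
    if gray.shape != (28, 28):
        raise ValueError("expected a 28x28 grayscale image")
    inverted = 255.0 - gray  # dark ink on white paper -> bright digit on black
    return (inverted / 255.0).reshape(1, 784)

# Synthetic example: a white page with a single black pixel.
page = np.full((28, 28), 255, dtype=np.uint8)
page[14, 14] = 0

x = prepare_digit(page)
print(x.shape, x.min(), x.max())
```

An array loaded with `np.array(Image.open(path).convert('L'))` could be passed to the same helper, provided the file is already 28x28.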

Saving the Model

Saving the whole model

The loaded model is used as-is, without rebuilding or adjusting its architecture in code

In [36]:

model.save('mnist.h5')

In [37]:

from keras.models import load_model

In [38]:

new_model = load_model('mnist.h5')

In [39]:

np.argmax(new_model.predict(x_test)[0])

Out [39]:

313/313 [==============================] - 0s 1ms/step

7

In [40]:

np.argmax(y_test[0])

Out [40]:

7

In [41]:

plt.imshow(x_test[0].reshape(28, 28), cmap='gray')

Out [41]:

<matplotlib.image.AxesImage at 0x7f0683932f40>

Saving only the weights

The architecture is rebuilt in code, so the model can be readjusted

In [42]:

model.save_weights('mnist') # creates 3 files

In [43]:

# A model with the same architecture as the one whose weights were saved is required

model1 = Sequential()

model1.add(Dense(64, activation='relu', input_shape=(784,)))

model1.add(Dense(32, activation='relu'))

model1.add(Dense(10, activation='softmax'))

In [44]:

model1.load_weights('mnist')

Out [44]:

<tensorflow.python.training.tracking.util.CheckpointLoadStatus at 0x7f06838e0880>

In [45]:

np.argmax(model1.predict(x_test)[0])

Out [45]:

313/313 [==============================] - 0s 1ms/step

7
