Deep Learning (06) - Neural Networks / Multi-Label Classification

Applying a Neural Network

Which Garment and Which Color?

In [1]:

!pwd

Out [1]:

/content

In [2]:

!mkdir clothes_dataset

In [3]:

!unzip '/content/drive/MyDrive/Colab Notebooks/deep_learning/clothes_dataset.zip' -d ./clothes_dataset/

Out [3]:

inflating: ./clothes_dataset/brown_shoes/30ef20bcb027c99409c81fd6127957502b0e693e.jpg

inflating: ./clothes_dataset/brown_shoes/312cf581fd4ec3678b8794f9f488aa1dad2f2908.jpg

...

inflating: ./clothes_dataset/white_shorts/fb625925bc55cd5c0a147d8214b63d806ac7ecb1.jpg

inflating: ./clothes_dataset/white_shorts/fd76a8fe7e6c5d2fe5f346c3207f75325a781d82.jpg

Organizing the Data

In [4]:

import glob

import cv2
import numpy as np
import pandas as pd
import tensorflow as tf

# Collect every image path; the parent folder name has the form "<color>_<clothes>".
all_data = np.array(glob.glob('/content/clothes_dataset/*/*.jpg'))

# Set the matching label positions to 1 to mark the color and the clothing type.
def check_cc(color, clothes):
    labels = np.zeros(11,)

    # color check (indices 0-5)
    if color == 'black':
        labels[0] = 1
        color_index = 0
    elif color == 'blue':
        labels[1] = 1
        color_index = 1
    elif color == 'brown':
        labels[2] = 1
        color_index = 2
    elif color == 'green':
        labels[3] = 1
        color_index = 3
    elif color == 'red':
        labels[4] = 1
        color_index = 4
    elif color == 'white':
        labels[5] = 1
        color_index = 5

    # clothes check (indices 6-10)
    if clothes == 'dress':
        labels[6] = 1
    elif clothes == 'shirt':
        labels[7] = 1
    elif clothes == 'pants':
        labels[8] = 1
    elif clothes == 'shorts':
        labels[9] = 1
    elif clothes == 'shoes':
        labels[10] = 1

    return labels, color_index

# Allocate arrays to hold the multi-hot labels and the color index.
all_labels = np.empty((all_data.shape[0], 11))
all_color_labels = np.empty((all_data.shape[0], 1))

for i, data in enumerate(all_data):
    # e.g. '.../green_shorts/xxx.jpg' -> ['green', 'shorts']
    color_and_clothes = all_data[i].split('/')[-2].split('_')
    color = color_and_clothes[0]
    clothes = color_and_clothes[1]

    labels, color_index = check_cc(color, clothes)
    all_labels[i] = labels
    all_color_labels[i] = color_index

# Append the color index as a 12th column.
all_labels = np.concatenate((all_labels, all_color_labels), axis=-1)
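The labeling rule above can also be written as a table lookup. The following is a minimal sketch, not the notebook's own code: the names `COLORS`, `CLOTHES`, and `encode_folder` are hypothetical, and the index order is assumed to match `check_cc`.

```python
# Hypothetical helper (not from the notebook): multi-hot encode a folder name
# such as "green_shorts", using the same index order as check_cc.
COLORS = ['black', 'blue', 'brown', 'green', 'red', 'white']
CLOTHES = ['dress', 'shirt', 'pants', 'shorts', 'shoes']

def encode_folder(folder_name):
    color, clothes = folder_name.split('_')
    labels = [0.0] * (len(COLORS) + len(CLOTHES))
    color_index = COLORS.index(color)  # raises ValueError for an unknown color
    labels[color_index] = 1.0
    labels[len(COLORS) + CLOTHES.index(clothes)] = 1.0
    return labels, color_index

labels, color_index = encode_folder('green_shorts')
print(labels)       # positions 3 (green) and 9 (shorts) are set to 1.0
print(color_index)  # 3
```

Each image therefore carries exactly two active labels out of eleven: one color and one clothing type.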

In [5]:

from sklearn.model_selection import train_test_split

# Split into training, validation, and test sets.
train_x, test_x, train_y, test_y = train_test_split(
    all_data, all_labels, shuffle=True, test_size=0.3, random_state=99)

train_x, val_x, train_y, val_y = train_test_split(
    train_x, train_y, shuffle=True, test_size=0.3, random_state=99)
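Splitting twice with `test_size = 0.3` leaves roughly 49% of the images for training, 21% for validation, and 30% for testing. The sketch below reproduces the arithmetic with a hypothetical helper `split_sizes`. It assumes the test share is rounded up and the remainder goes to training, and it takes the total from the image counts that the generators report later in this post (5578 + 2391 + 3416).

```python
import math

def split_sizes(n_samples, test_size):
    # Assumed rounding rule: the test share is rounded up,
    # and everything else stays in the training portion.
    n_test = math.ceil(test_size * n_samples)
    return n_samples - n_test, n_test

total = 5578 + 2391 + 3416  # counts reported by the generators in this post
n_trainval, n_test = split_sizes(total, 0.3)
n_train, n_val = split_sizes(n_trainval, 0.3)
print(n_train, n_val, n_test)
```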

In [6]:

train_df = pd.DataFrame({'image': train_x, 'black': train_y[:, 0], 'blue': train_y[:, 1],
                         'brown': train_y[:, 2], 'green': train_y[:, 3], 'red': train_y[:, 4],
                         'white': train_y[:, 5], 'dress': train_y[:, 6], 'shirt': train_y[:, 7],
                         'pants': train_y[:, 8], 'shorts': train_y[:, 9], 'shoes': train_y[:, 10],
                         'color': train_y[:, 11]})

val_df = pd.DataFrame({'image': val_x, 'black': val_y[:, 0], 'blue': val_y[:, 1],
                       'brown': val_y[:, 2], 'green': val_y[:, 3], 'red': val_y[:, 4],
                       'white': val_y[:, 5], 'dress': val_y[:, 6], 'shirt': val_y[:, 7],
                       'pants': val_y[:, 8], 'shorts': val_y[:, 9], 'shoes': val_y[:, 10],
                       'color': val_y[:, 11]})

test_df = pd.DataFrame({'image': test_x, 'black': test_y[:, 0], 'blue': test_y[:, 1],
                        'brown': test_y[:, 2], 'green': test_y[:, 3], 'red': test_y[:, 4],
                        'white': test_y[:, 5], 'dress': test_y[:, 6], 'shirt': test_y[:, 7],
                        'pants': test_y[:, 8], 'shorts': test_y[:, 9], 'shoes': test_y[:, 10],
                        'color': test_y[:, 11]})

In [7]:

train_df.head()

Out [7]:

| | image | black | blue | brown | green | red | white | dress | shirt | pants | shorts | shoes | color |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | /content/clothes_dataset/green_shorts/e74d11d3... | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 3.0 |
| 1 | /content/clothes_dataset/black_dress/f1be32393... | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| 2 | /content/clothes_dataset/black_shoes/04f78f68a... | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 |
| 3 | /content/clothes_dataset/brown_pants/0671d132b... | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 2.0 |
| 4 | /content/clothes_dataset/white_shoes/59803fb01... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 | 5.0 |

In [8]:

!pwd

Out [8]:

/content

In [9]:

!mkdir csv_data

In [10]:

train_df.to_csv('/content/csv_data/train.csv', index=False)

val_df.to_csv('/content/csv_data/val.csv', index=False)

test_df.to_csv('/content/csv_data/test.csv', index=False)

Defining the Image Generators and Building the Model

In [11]:

from keras.preprocessing.image import ImageDataGenerator

In [12]:

# rescale multiplies every loaded pixel value by the given factor, shrinking the range from 0-255 to 0-1.
train_datagen = ImageDataGenerator(rescale=1./255)
val_datagen = ImageDataGenerator(rescale=1./255)

# Compute the number of steps (batches) needed to cover all samples once.
def get_steps(num_samples, batch_size):
    if (num_samples % batch_size) > 0:
        return (num_samples // batch_size) + 1
    else:
        return num_samples // batch_size
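The step count is simply the sample count divided by the batch size, rounded up, so the final partial batch still gets its own step. A minimal equivalent sketch follows. The name `get_steps_ceil` is hypothetical, and the sample counts are the ones reported by the generators later in this post.

```python
import math

def get_steps_ceil(num_samples, batch_size):
    # Ceiling division: a leftover partial batch counts as one more step.
    return math.ceil(num_samples / batch_size)

steps = [get_steps_ceil(n, 32) for n in (5578, 2391, 3416)]
print(steps)  # [175, 75, 107]
```

The 175 and 107 match the progress bars shown in the training and test-prediction logs.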

- Model architecture

In [13]:

from keras.models import Sequential

from keras.layers import Dense, Flatten

In [14]:

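model = Sequential()
model.add(Flatten(input_shape=(112, 112, 3)))  # 3 channels because the images are RGB
model.add(Dense(128, activation='relu'))
model.add(Dense(64, activation='relu'))
# softmax yields one distribution over all classes (a single winner);
# sigmoid maps each output independently to a value between 0 and 1,
# which is what multi-label classification needs.
model.add(Dense(11, activation='sigmoid'))

model.compile(optimizer='adam',
              loss='binary_crossentropy',  # loss function: binary cross-entropy
              metrics=['binary_accuracy'])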
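To see why the output layer uses sigmoid rather than softmax, consider what happens when two labels should both be active. The sketch below uses made-up logits and plain Python. It is an illustration only, not part of the notebook.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(zs):
    m = max(zs)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

# Made-up scores: the first two labels are both strongly present.
logits = [4.0, 4.0, -3.0]
sig = [sigmoid(z) for z in logits]
soft = softmax(logits)
print([round(p, 2) for p in sig])   # each label scored independently
print([round(p, 2) for p in soft])  # probabilities compete and sum to 1
```

With sigmoid both present labels can approach 1 at the same time, whereas softmax forces them to share a total probability of 1, so neither can exceed about 0.5 here.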

In [15]:

model.summary()

Out [15]:

Model: "sequential"
_________________________________________________________________
 Layer (type)                Output Shape              Param #
=================================================================
 flatten (Flatten)           (None, 37632)             0
 dense (Dense)               (None, 128)               4817024
 dense_1 (Dense)             (None, 64)                8256
 dense_2 (Dense)             (None, 11)                715
=================================================================
Total params: 4,825,995
Trainable params: 4,825,995
Non-trainable params: 0
_________________________________________________________________
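The parameter counts in the summary can be checked by hand: a Dense layer has one weight per input-output pair plus one bias per output. A short sketch, with `dense_params` as a hypothetical helper name:

```python
def dense_params(n_in, n_out):
    # weights (n_in * n_out) plus biases (n_out)
    return n_in * n_out + n_out

flat = 112 * 112 * 3  # size of the flattened RGB image
counts = [dense_params(flat, 128), dense_params(128, 64), dense_params(64, 11)]
print(flat)         # 37632
print(counts)       # [4817024, 8256, 715]
print(sum(counts))  # 4825995
```

Nearly all of the parameters sit in the first Dense layer, because every one of the 37,632 flattened pixel values connects to each of its 128 units.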

Defining the Data Generators

In [16]:

train_df.columns

Out [16]:

Index(['image', 'black', 'blue', 'brown', 'green', 'red', 'white', 'dress',

'shirt', 'pants', 'shorts', 'shoes', 'color'],

dtype='object')

In [17]:

batch_size = 32

class_col = ['black', 'blue', 'brown', 'green', 'red', 'white',

'dress', 'shirt', 'pants', 'shorts', 'shoes']

In [18]:

# Create the generators
train_generator = train_datagen.flow_from_dataframe(
    dataframe=train_df, x_col='image', y_col=class_col,
    target_size=(112, 112), color_mode='rgb', class_mode='raw',
    batch_size=batch_size, shuffle=True, seed=42)

val_generator = val_datagen.flow_from_dataframe(
    dataframe=val_df, x_col='image', y_col=class_col,
    target_size=(112, 112), color_mode='rgb', class_mode='raw',
    batch_size=batch_size, shuffle=True)

Out [18]:

Found 5578 validated image filenames.

Found 2391 validated image filenames.

Training the Model with the Generators

In [19]:

model.fit(train_generator,
          steps_per_epoch=get_steps(len(train_df), batch_size),
          validation_data=val_generator,
          validation_steps=get_steps(len(val_df), batch_size),
          epochs=10)

Out [19]:

Epoch 1/10

175/175 [==============================] - 31s 158ms/step - loss: 0.6019 - binary_accuracy: 0.8315 - val_loss: 0.3970 - val_binary_accuracy: 0.8343

Epoch 2/10

175/175 [==============================] - 27s 155ms/step - loss: 0.3113 - binary_accuracy: 0.8790 - val_loss: 0.2709 - val_binary_accuracy: 0.8987

Epoch 3/10

175/175 [==============================] - 27s 155ms/step - loss: 0.2832 - binary_accuracy: 0.8913 - val_loss: 0.2794 - val_binary_accuracy: 0.8905

Epoch 4/10

175/175 [==============================] - 29s 164ms/step - loss: 0.2347 - binary_accuracy: 0.9062 - val_loss: 0.2622 - val_binary_accuracy: 0.9001

Epoch 5/10

175/175 [==============================] - 27s 152ms/step - loss: 0.2218 - binary_accuracy: 0.9120 - val_loss: 0.2417 - val_binary_accuracy: 0.9004

Epoch 6/10

175/175 [==============================] - 27s 154ms/step - loss: 0.2011 - binary_accuracy: 0.9193 - val_loss: 0.2290 - val_binary_accuracy: 0.9156

Epoch 7/10

175/175 [==============================] - 27s 153ms/step - loss: 0.1905 - binary_accuracy: 0.9244 - val_loss: 0.2051 - val_binary_accuracy: 0.9205

Epoch 8/10

175/175 [==============================] - 28s 159ms/step - loss: 0.1802 - binary_accuracy: 0.9290 - val_loss: 0.2301 - val_binary_accuracy: 0.9138

Epoch 9/10

175/175 [==============================] - 32s 181ms/step - loss: 0.1873 - binary_accuracy: 0.9262 - val_loss: 0.1955 - val_binary_accuracy: 0.9252

Epoch 10/10

175/175 [==============================] - 27s 154ms/step - loss: 0.1659 - binary_accuracy: 0.9346 - val_loss: 0.1960 - val_binary_accuracy: 0.9233

<keras.callbacks.History at 0x7f1867fb1790>
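When reading these numbers, keep in mind that `binary_accuracy` scores each of the 11 labels separately, and 9 of them are always 0 for any image. The sketch below is a simplified re-implementation for illustration, assuming a 0.5 threshold. It shows that predicting "nothing" for every label already scores about 82%, which is close to the 0.8315 logged in the first epoch.

```python
def binary_accuracy(y_true, y_prob, threshold=0.5):
    # Fraction of individual labels whose thresholded prediction matches the target.
    correct = sum((p > threshold) == (t > 0.5) for t, p in zip(y_true, y_prob))
    return correct / len(y_true)

y_true = [0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0]  # green shorts
all_zero = [0.0] * 11                        # a model that predicts no label at all
baseline = binary_accuracy(y_true, all_zero)
print(round(baseline, 4))  # 0.8182, i.e. 9 of 11 labels correct
```

A final validation score around 0.92 is therefore better than this trivial baseline, but it does not mean that 92% of images receive both the correct color and the correct garment.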

Predicting on the Test Data

In [20]:

test_datagen = ImageDataGenerator(rescale=1./255)

test_generator = test_datagen.flow_from_dataframe(
    dataframe=test_df, x_col='image',
    target_size=(112, 112), color_mode='rgb', class_mode=None,
    batch_size=batch_size, shuffle=False)

preds = model.predict(test_generator, steps=get_steps(len(test_df), batch_size), verbose=1)

Out [20]:

Found 3416 validated image filenames.

107/107 [==============================] - 12s 110ms/step

In [21]:

np.round(preds[0], 2)

Out [21]:

array([0. , 0. , 0. , 1. , 0. , 0. , 0. , 1. , 0. , 0.17, 0. ],

dtype=float32)
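Because the first six outputs are colors and the last five are clothing types, one way to turn this vector into a readable answer is to take the highest score within each group. The following is a sketch under that assumption. `decode` is a hypothetical helper, and `class_col` repeats the column order defined earlier.

```python
class_col = ['black', 'blue', 'brown', 'green', 'red', 'white',
             'dress', 'shirt', 'pants', 'shorts', 'shoes']

def decode(pred):
    # Pick the best-scoring color (indices 0-5) and garment (indices 6-10).
    color_idx = max(range(0, 6), key=lambda i: pred[i])
    clothes_idx = max(range(6, 11), key=lambda i: pred[i])
    return class_col[color_idx], class_col[clothes_idx]

pred = [0., 0., 0., 1., 0., 0., 0., 1., 0., 0.17, 0.]  # the rounded vector printed above
print(decode(pred))  # ('green', 'shirt')
```

Grouping the argmax this way guarantees exactly one color and one garment, which a plain top-2 over all eleven scores does not.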

In [22]:

import matplotlib.pyplot as plt

In [23]:

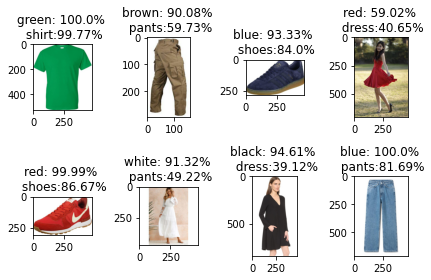

do_preds = preds[:8]

for i, pred in enumerate(do_preds):

plt.subplot(2, 4, i+1)

prob = zip(class_col, list(pred))

prob = sorted(list(prob), key=lambda x: x[1], reverse=True)[:2] # 내림차순 정렬

image = cv2.imread(test_df['image'][i]) # 경로 지정 읽어오기

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB) # BGR 패턴을 RGB 패턴으로 변경

plt.imshow(image)

plt.title(f'{prob[0][0]}: {round(prob[0][1]*100, 2)}% \n {prob[1][0]}:{round(prob[1][1]*100, 2)}%')

plt.tight_layout()

plt.show()

Out [23]:

Predicting on Real-World Images

In [24]:

data_datagen = ImageDataGenerator(rescale=1./255)

data_glob = sorted(glob.glob('/content/drive/MyDrive/Colab Notebooks/deep_learning/06_clothes_img/*/*.png'))

data_generator = data_datagen.flow_from_directory(
    directory='/content/drive/MyDrive/Colab Notebooks/deep_learning/06_clothes_img',
    target_size=(112, 112), color_mode='rgb', class_mode=None,
    batch_size=batch_size, shuffle=False)

Out [24]:

Found 3 images belonging to 1 classes.

In [25]:

result = model.predict(data_generator, steps=get_steps(3, batch_size), verbose=1)

Out [25]:

1/1 [==============================] - 0s 35ms/step

In [26]:

np.round(result, 2)

Out [26]:

array([[0. , 0. , 0. , 0. , 1. , 0. , 0.01, 0. , 0.22, 0. , 0.64],

[0. , 1. , 0. , 0. , 0. , 0. , 0.01, 0. , 0.05, 0.04, 0.65],

[0.02, 0. , 1. , 0.02, 0.14, 0. , 0. , 0. , 0.92, 0.08, 0.45]],

dtype=float32)
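Another way to read these rows is to keep every label whose score clears a threshold. The sketch below assumes a cutoff of 0.5, which is an illustrative choice rather than something the notebook sets, and applies it to the three rounded rows printed above. `labels_above` is a hypothetical helper.

```python
class_col = ['black', 'blue', 'brown', 'green', 'red', 'white',
             'dress', 'shirt', 'pants', 'shorts', 'shoes']

def labels_above(pred, threshold=0.5):
    # Keep every label whose score reaches the (assumed) threshold.
    return [name for name, p in zip(class_col, pred) if p >= threshold]

result = [
    [0.,   0., 0., 0.,   1.,   0., 0.01, 0., 0.22, 0.,   0.64],
    [0.,   1., 0., 0.,   0.,   0., 0.01, 0., 0.05, 0.04, 0.65],
    [0.02, 0., 1., 0.02, 0.14, 0., 0.,   0., 0.92, 0.08, 0.45],
]
decoded = [labels_above(row) for row in result]
print(decoded)  # [['red', 'shoes'], ['blue', 'shoes'], ['brown', 'pants']]
```

In every row the color score is close to 1 while the garment score is noticeably lower, so on these three images the model is far more certain about the color than about the clothing type.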

In [27]:

for i, pred in enumerate(result):
    plt.subplot(1, 3, i+1)
    prob = zip(class_col, list(pred))
    prob = sorted(list(prob), key=lambda x: x[1], reverse=True)[:2]  # sort in descending order, keep the top two
    image = cv2.imread(data_glob[i])  # read the image from its path
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # convert BGR channel order to RGB
    plt.imshow(image)
    plt.title(f'{prob[0][0]}: {round(prob[0][1]*100, 2)}% \n {prob[1][0]}: {round(prob[1][1]*100, 2)}%')

plt.tight_layout()
plt.show()

Out [27]:

Reference

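- This post summarizes lectures given by instructor 심선조 in the SeSAC training program for application software developers using AI natural language processing and computer vision technologies.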
