Deep Learning (07) - CNN / Fashion-MNIST

컨볼루션 신경망

- 컨볼루션 신경망(Convolution Neural Network)

이미지 데이터를 다루는 컴퓨터 비전 분야에서 활발하게 사용중이다.

일단 사용해보기

데이터 살펴보기

In [1]:

from keras.datasets import fashion_mnist

In [2]:

# 데이터 받기

(x_train, y_train), (x_test, y_test) = fashion_mnist.load_data()

Out [2]:

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-labels-idx1-ubyte.gz

29515/29515 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-images-idx3-ubyte.gz

26421880/26421880 [==============================] - 1s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-labels-idx1-ubyte.gz

5148/5148 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-images-idx3-ubyte.gz

4422102/4422102 [==============================] - 0s 0us/step

In [3]:

import matplotlib.pyplot as plt

import numpy as np

In [4]:

x_train.shape

Out [4]:

(60000, 28, 28)

In [5]:

np.random.seed(777)

class_name = ['T-shirt/top','Trouser','Pullover','Dress','Coat','Sandal','Shirt','Sneaker','Bag','Ankle boot']

sample_size = 9

random_idx = np.random.randint(60000, size=sample_size)

# 범위 축소

x_train = np.reshape(x_train/255, (-1, 28, 28, 1))

x_test = np.reshape(x_test/255, (-1, 28, 28, 1))

In [6]:

x_train.shape

Out [6]:

(60000, 28, 28, 1)

In [7]:

x_train.min(), x_train.max()

Out [7]:

(0.0, 1.0)

In [8]:

y_train[0]

Out [8]:

9

- 레이블을 범주형 형태로 변경

In [9]:

from keras.utils import to_categorical

In [10]:

y_train = to_categorical(y_train)

y_test = to_categorical(y_test)

In [11]:

y_train[0]

Out [11]:

array([0., 0., 0., 0., 0., 0., 0., 0., 0., 1.], dtype=float32)

- 검증 데이터셋 만들기

In [12]:

from sklearn.model_selection import train_test_split

In [13]:

x_train, x_val, y_train, y_val = train_test_split(x_train, y_train, test_size=0.3, random_state=777)

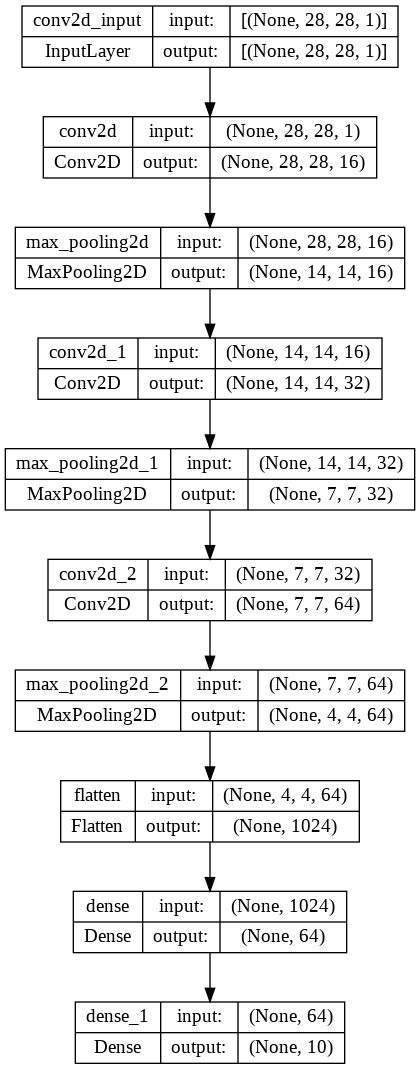

모델 구성하기

In [14]:

from keras.models import Sequential

from keras.layers import Dense, Flatten, Conv2D, MaxPool2D

In [15]:

model = Sequential([

Conv2D(filters = 16, kernel_size=3, strides=(1, 1), padding='same', activation='relu', input_shape=(28, 28, 1)),

MaxPool2D(pool_size=(2, 2), strides=2, padding='same'),

Conv2D(filters = 32, kernel_size=3, strides=(1, 1), padding='same', activation='relu'),

MaxPool2D(pool_size=(2, 2), strides=2, padding='same'),

Conv2D(filters = 64, kernel_size=3, strides=(1, 1), padding='same', activation='relu'),

MaxPool2D(pool_size=(2, 2), strides=2, padding='same'),

Flatten(),

# 분류기

Dense(64, activation='relu'),

Dense(10, activation='softmax')

])

model.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['acc'])

model.summary()

Out [15]:

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 28, 28, 16) 160

max_pooling2d (MaxPooling2D (None, 14, 14, 16) 0

)

conv2d_1 (Conv2D) (None, 14, 14, 32) 4640

max_pooling2d_1 (MaxPooling (None, 7, 7, 32) 0

2D)

conv2d_2 (Conv2D) (None, 7, 7, 64) 18496

max_pooling2d_2 (MaxPooling (None, 4, 4, 64) 0

2D)

flatten (Flatten) (None, 1024) 0

dense (Dense) (None, 64) 65600

dense_1 (Dense) (None, 10) 650

=================================================================

Total params: 89,546

Trainable params: 89,546

Non-trainable params: 0

_________________________________________________________________

In [16]:

model.fit(x_train, y_train, epochs=30, batch_size=32, validation_data=(x_val, y_val))

Out [16]:

Epoch 1/30

1313/1313 [==============================] - 18s 6ms/step - loss: 0.5233 - acc: 0.8086 - val_loss: 0.3687 - val_acc: 0.8671

Epoch 2/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.3337 - acc: 0.8782 - val_loss: 0.2983 - val_acc: 0.8936

Epoch 3/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.2834 - acc: 0.8961 - val_loss: 0.2718 - val_acc: 0.9027

Epoch 4/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.2531 - acc: 0.9058 - val_loss: 0.2600 - val_acc: 0.9050

Epoch 5/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.2253 - acc: 0.9162 - val_loss: 0.2609 - val_acc: 0.9068

Epoch 6/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.2061 - acc: 0.9250 - val_loss: 0.2502 - val_acc: 0.9103

Epoch 7/30

1313/1313 [==============================] - 12s 9ms/step - loss: 0.1866 - acc: 0.9311 - val_loss: 0.2422 - val_acc: 0.9138

Epoch 8/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.1702 - acc: 0.9368 - val_loss: 0.2570 - val_acc: 0.9101

Epoch 9/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.1575 - acc: 0.9400 - val_loss: 0.2342 - val_acc: 0.9155

Epoch 10/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.1428 - acc: 0.9471 - val_loss: 0.2541 - val_acc: 0.9109

Epoch 11/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.1293 - acc: 0.9508 - val_loss: 0.2574 - val_acc: 0.9127

Epoch 12/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.1188 - acc: 0.9552 - val_loss: 0.2733 - val_acc: 0.9138

Epoch 13/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.1072 - acc: 0.9598 - val_loss: 0.2725 - val_acc: 0.9142

Epoch 14/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0961 - acc: 0.9647 - val_loss: 0.2842 - val_acc: 0.9132

Epoch 15/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0856 - acc: 0.9669 - val_loss: 0.3236 - val_acc: 0.9108

Epoch 16/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.0790 - acc: 0.9700 - val_loss: 0.3159 - val_acc: 0.9141

Epoch 17/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.0728 - acc: 0.9721 - val_loss: 0.3244 - val_acc: 0.9146

Epoch 18/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0683 - acc: 0.9742 - val_loss: 0.3384 - val_acc: 0.9129

Epoch 19/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0607 - acc: 0.9775 - val_loss: 0.3859 - val_acc: 0.9094

Epoch 20/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.0558 - acc: 0.9792 - val_loss: 0.4072 - val_acc: 0.9106

Epoch 21/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0520 - acc: 0.9811 - val_loss: 0.3798 - val_acc: 0.9124

Epoch 22/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.0517 - acc: 0.9801 - val_loss: 0.4326 - val_acc: 0.9110

Epoch 23/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0449 - acc: 0.9832 - val_loss: 0.4564 - val_acc: 0.9134

Epoch 24/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0462 - acc: 0.9828 - val_loss: 0.4471 - val_acc: 0.9052

Epoch 25/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0410 - acc: 0.9845 - val_loss: 0.4765 - val_acc: 0.9093

Epoch 26/30

1313/1313 [==============================] - 8s 6ms/step - loss: 0.0388 - acc: 0.9851 - val_loss: 0.4571 - val_acc: 0.9118

Epoch 27/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.0379 - acc: 0.9860 - val_loss: 0.4706 - val_acc: 0.9124

Epoch 28/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.0339 - acc: 0.9873 - val_loss: 0.5175 - val_acc: 0.9067

Epoch 29/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.0362 - acc: 0.9870 - val_loss: 0.5245 - val_acc: 0.9154

Epoch 30/30

1313/1313 [==============================] - 7s 5ms/step - loss: 0.0313 - acc: 0.9882 - val_loss: 0.5405 - val_acc: 0.9089

<keras.callbacks.History at 0x7ff51007c520>

- 모델 구조 확인하기

In [17]:

from keras.utils import plot_model

In [18]:

plot_model(model, './model.png', show_shapes=True)

Out [18]:

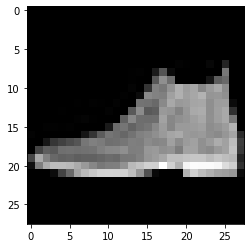

- 예측하기

In [19]:

pred = model.predict(x_test)

Out [19]:

313/313 [==============================] - 1s 2ms/step

In [20]:

pred[0]

Out [20]:

array([2.46575831e-24, 1.15496255e-26, 8.73077487e-24, 1.50579012e-21,

6.77957373e-29, 5.91380470e-17, 1.37762093e-23, 1.45185903e-11,

9.42009545e-27, 1.00000000e+00], dtype=float32)

In [21]:

class_name[np.argmax(np.round(pred[0], 2))]

Out [21]:

'Ankle boot'

In [22]:

plt.imshow(x_test[0].reshape(28, 28), cmap='gray')

Out [22]:

<matplotlib.image.AxesImage at 0x7ff4aa6654f0>

모델 저장하기

In [23]:

model.save_weights('/content/drive/MyDrive/Colab Notebooks/deep_learning/model/07_cnn_fashion_mnist/model')

- 모델 불러오기

In [24]:

model1 = Sequential([

Conv2D(filters = 16, kernel_size=3, strides=(1, 1), padding='same', activation='relu', input_shape=(28, 28, 1)),

MaxPool2D(pool_size=(2, 2), strides=2, padding='same'),

Conv2D(filters = 32, kernel_size=3, strides=(1, 1), padding='same', activation='relu'),

MaxPool2D(pool_size=(2, 2), strides=2, padding='same'),

Conv2D(filters = 64, kernel_size=3, strides=(1, 1), padding='same', activation='relu'),

MaxPool2D(pool_size=(2, 2), strides=2, padding='same'),

Flatten(),

# 분류기

Dense(64, activation='relu'),

Dense(10, activation='softmax')

])

In [25]:

model1.load_weights('/content/drive/MyDrive/Colab Notebooks/deep_learning/model/07_cnn_fashion_mnist/model')

Out [25]:

<tensorflow.python.training.tracking.util.CheckpointLoadStatus at 0x7ff5903a0a00>

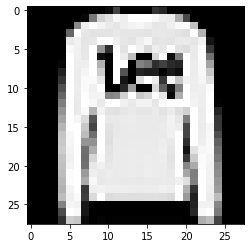

- 불러온 모델로 예측하기

In [26]:

pred = model1.predict(x_test)

print(class_name[np.argmax(np.round(pred[1], 2))])

plt.imshow(x_test[1].reshape(28, 28), cmap='gray')

Out [26]:

313/313 [==============================] - 1s 2ms/step

Pullover

<matplotlib.image.AxesImage at 0x7ff4aa0f06d0>

Reference

- 이 포스트는 SeSAC 인공지능 자연어처리, 컴퓨터비전 기술을 활용한 응용 SW 개발자 양성 과정 - 심선조 강사님의 강의를 정리한 내용입니다.

댓글남기기