Deep Learning (18-2) - YOLOv3 TensorFlow

YOLO (You Only Look Once)

YOLOv3 Model (TensorFlow)

- Code: https://github.com/zzh8829/yolov3-tf2

Downloading the model

# Align the Keras version (remove the preinstalled tensorflow and keras first)

!pip uninstall -y tensorflow keras

Found existing installation: tensorflow 2.9.2

Uninstalling tensorflow-2.9.2:

Successfully uninstalled tensorflow-2.9.2

Found existing installation: keras 2.9.0

Uninstalling keras-2.9.0:

Successfully uninstalled keras-2.9.0

# Clone the repository from GitHub

!git clone https://github.com/zzh8829/yolov3-tf2

Cloning into 'yolov3-tf2'...

remote: Enumerating objects: 442, done.

remote: Counting objects: 100% (9/9), done.

remote: Compressing objects: 100% (9/9), done.

remote: Total 442 (delta 3), reused 0 (delta 0), pack-reused 433

Receiving objects: 100% (442/442), 4.24 MiB | 15.97 MiB/s, done.

Resolving deltas: 100% (251/251), done.

%cd yolov3-tf2

!pip install -r requirements-gpu.txt

!pip install keras==2.4.2

/content/yolov3-tf2

Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/

Obtaining file:///content/yolov3-tf2 (from -r requirements-gpu.txt (line 6))

Collecting tensorflow-gpu==2.5.1

...

Successfully installed absl-py-0.15.0 grpcio-1.34.1 keras-nightly-2.5.0.dev2021032900 numpy-1.19.5 opencv-python-4.2.0.32 tensorflow-estimator-2.5.0 tensorflow-gpu-2.5.1 termcolor-1.1.0 typing-extensions-3.7.4.3 wrapt-1.12.1 yolov3-tf2-0.1

Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/

Collecting keras==2.4.2

Downloading Keras-2.4.2-py2.py3-none-any.whl (170 kB)

|████████████████████████████████| 170 kB 32.7 MB/s

Requirement already satisfied: h5py in /usr/local/lib/python3.8/dist-packages (from keras==2.4.2) (3.1.0)

Requirement already satisfied: numpy>=1.9.1 in /usr/local/lib/python3.8/dist-packages (from keras==2.4.2) (1.19.5)

Requirement already satisfied: scipy>=0.14 in /usr/local/lib/python3.8/dist-packages (from keras==2.4.2) (1.7.3)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.8/dist-packages (from keras==2.4.2) (6.0)

Installing collected packages: keras

Successfully installed keras-2.4.2
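
Because the install steps above pin specific versions, it can help to confirm what actually ended up in the environment before moving on. The following is a minimal sketch using only the standard library; the helper name `installed_versions` is hypothetical, and the package names are taken from the install log above.

```python
from importlib import metadata


def installed_versions(packages):
    """Return {package: version string, or None if it is not installed}."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    wanted = ["tensorflow-gpu", "keras", "opencv-python"]
    for name, version in installed_versions(wanted).items():
        print(f"{name}: {version or 'not installed'}")
```

If a version differs from the one in the log, restarting the runtime after the install is usually the first thing to try.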

Model file conversion

Convert the Darknet model file into a model file that Keras can load.

- convert.py: the conversion script
- yolov3.weights: the model file trained with Darknet
- yolov3.tf: the Keras YOLOv3 model
- YOLOv3 weights: https://pjreddie.com/media/files/yolov3.weights
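
For orientation, a Darknet `.weights` file is a flat binary file: a short integer header followed by raw float32 parameters. The sketch below reads only the header. It assumes a 20-byte header of five little-endian 32-bit integers (major, minor, revision, and two words for the "seen" image counter); this layout is an assumption and should be checked against the loader that `convert.py` calls if exact values matter. `read_darknet_header` is a hypothetical helper name.

```python
import os
import struct

HEADER_FORMAT = "<5i"  # assumed: five little-endian int32 values
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 20 bytes


def read_darknet_header(path):
    """Read the assumed 20-byte header and count the float32 values after it."""
    with open(path, "rb") as f:
        major, minor, revision, _seen_lo, _seen_hi = struct.unpack(
            HEADER_FORMAT, f.read(HEADER_SIZE)
        )
    payload_bytes = os.path.getsize(path) - HEADER_SIZE
    return {
        "version": (major, minor, revision),
        "num_float32": payload_bytes // 4,  # each parameter is 4 bytes
    }
```

Under this assumption, a 248007048-byte file (the size of `yolov3.weights` reported on its download page) would hold (248007048 - 20) / 4 = 62001757 float32 values.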

!pwd

/content/yolov3-tf2

!wget https://pjreddie.com/media/files/yolov3.weights -O data/yolov3.weights

--2022-12-17 11:41:17-- https://pjreddie.com/media/files/yolov3.weights

Resolving pjreddie.com (pjreddie.com)... 128.208.4.108

Connecting to pjreddie.com (pjreddie.com)|128.208.4.108|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 248007048 (237M) [application/octet-stream]

Saving to: ‘data/yolov3.weights’

data/yolov3.weights 100%[===================>] 236.52M 14.7MB/s in 16s

2022-12-17 11:41:34 (14.6 MB/s) - ‘data/yolov3.weights’ saved [248007048/248007048]

!python convert.py --weights ./data/yolov3.weights --output ./checkpoints/yolov3.tf

2022-12-17 11:41:35.275002: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcudart.so.11.0

2022-12-17 11:41:36.685175: I tensorflow/stream_executor/platform/default/dso_loader.cc:53] Successfully opened dynamic library libcuda.so.1

2022-12-17 11:41:37.320239: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:937] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

...

I1217 11:41:49.875230 140643411785600 convert.py:31] sanity check passed

I1217 11:41:51.007428 140643411785600 convert.py:34] weights saved
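
After the "weights saved" message, it is worth confirming that the checkpoint actually exists on disk. TensorFlow-format checkpoints are typically written as several files that share the given prefix (for example an index file plus one or more data shards), so this sketch simply lists everything that starts with the prefix. The exact file names are an assumption here, and `list_checkpoint_files` is a hypothetical helper.

```python
import glob
import os


def list_checkpoint_files(prefix):
    """Return (file name, size in bytes) for every file whose path starts with prefix."""
    paths = sorted(glob.glob(glob.escape(prefix) + "*"))
    return [(os.path.basename(p), os.path.getsize(p)) for p in paths if os.path.isfile(p)]


if __name__ == "__main__":
    for name, size in list_checkpoint_files("./checkpoints/yolov3.tf"):
        print(f"{name}: {size / 1e6:.1f} MB")
```

An empty listing would suggest that the conversion wrote to a different path than the one passed to `--output`.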

Detector

import time

import cv2

import numpy as np

import tensorflow as tf

from yolov3_tf2.models import YoloV3

from yolov3_tf2.dataset import transform_images, load_tfrecord_dataset

from yolov3_tf2.utils import draw_outputs

from absl import app, logging, flags

from absl.flags import FLAGS

from tensorflow.keras.preprocessing import image

import matplotlib.pyplot as plt

from IPython.display import Image, display

flags.DEFINE_string('classes', './data/coco.names', 'classes file') # flag name, default value (a path here), help text

flags.DEFINE_string('weights', './checkpoints/yolov3.tf', 'weights file')

flags.DEFINE_boolean('tiny', False, 'yolov3 or yolov3_tiny') # choose between YOLOv3 and the smaller YOLOv3-tiny model

flags.DEFINE_integer('size', 416, 'resize images to') # size the input image is resized to

flags.DEFINE_string('image', './data/girl.png', 'input image') # default input image path

flags.DEFINE_string('tfrecord', None, 'tfrecord')

flags.DEFINE_string('output', './output.jpg', 'output image') # output image path

flags.DEFINE_integer('num_classes', 80, 'number of classes')

# Initialize absl and parse the flags

app._run_init(['yolov3'], app.parse_flags_with_usage)

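# Configure the runtime: enable memory growth on every visible GPU (no-op when there is none)

physical_devices = tf.config.experimental.list_physical_devices('GPU')

for physical_device in physical_devices:
    tf.config.experimental.set_memory_growth(physical_device, True)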

# Create the YOLO model and load the converted weights

yolo = YoloV3(classes=FLAGS.num_classes)

yolo.load_weights(FLAGS.weights).expect_partial()

class_names = [c.strip() for c in open(FLAGS.classes).readlines()] # read the file line by line into a list
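
The one-liner above leaves the file handle to be closed by the garbage collector and would keep any blank line as an empty class name. A slightly more defensive variant, as a sketch (the function name `load_class_names` is hypothetical):

```python
def load_class_names(path):
    """Read one class name per line, skipping blank lines."""
    with open(path, encoding="utf-8") as f:
        return [line.strip() for line in f if line.strip()]
```

It could be used as `class_names = load_class_names(FLAGS.classes)`; the length of the resulting list should match `FLAGS.num_classes`.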

W1217 11:41:58.950393 140274869294976 deprecation.py:528] From /usr/local/lib/python3.8/dist-packages/tensorflow/python/ops/array_ops.py:5043: calling gather (from tensorflow.python.ops.array_ops) with validate_indices is deprecated and will be removed in a future version.

Instructions for updating:

The `validate_indices` argument has no effect. Indices are always validated on CPU and never validated on GPU.

def detector():
    # Read the image file and decode it into a 3-channel tensor
    img_raw = tf.image.decode_image(open(FLAGS.image, 'rb').read(), channels=3)
    img = tf.expand_dims(img_raw, 0)  # add a leading batch dimension (3-D -> 4-D)
    img = transform_images(img, FLAGS.size)  # resize for the model input

    t1 = time.time()  # start time
    # Returns the box positions, scores, class indices, and the number of detected objects
    boxes, scores, classes, nums = yolo(img)
    t2 = time.time()  # end time
    print(f'time:{t2-t1}')  # time spent on detection

    # Print the class name, score, and box of every detection
    for i in range(nums[0]):
        print('{}, {}, {}'.format(class_names[classes[0][i]],
                                  np.array(scores[0][i]),
                                  np.array(boxes[0][i])))

    img = cv2.cvtColor(img_raw.numpy(), cv2.COLOR_RGB2BGR)  # convert RGB to BGR for OpenCV
    img = draw_outputs(img, (boxes, scores, classes, nums), class_names)
    return img
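
`detector()` times a single forward pass with `time.time()`. The first call to a model often includes one-off setup work, so a single measurement can be misleading (in the outputs in this post, the first run reports about 2.6 s and later runs about 0.13 s). The following is a minimal sketch of a timing helper that discards warm-up calls; `time_call` is a hypothetical helper that accepts any callable, for example `lambda: yolo(img)`.

```python
import time


def time_call(fn, warmup=1, repeats=5):
    """Call fn several times and return the mean duration in seconds, excluding warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up runs are executed but not timed
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return sum(durations) / len(durations)
```

`time.perf_counter()` is used instead of `time.time()` because it is intended for measuring short intervals.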

!ls data

checkpoint girl.png meme.jpg street.jpg voc2012.names

coco.names meme2.jpeg meme_out.jpg street_out.jpg yolov3.weights

FLAGS.image

'./data/girl.png'

Image(filename=FLAGS.image, width=500)

output = detector()

display(Image(data=bytes(cv2.imencode('.jpg', output)[1]), width=500)) # encode the output as JPEG and display it

time:2.6288774013519287

person, 0.9997697472572327, [0.06754467 0.03718701 0.967988 0.9650754 ]

chair, 0.9255483150482178, [0.0185187 0.34200275 0.17385134 0.62783295]

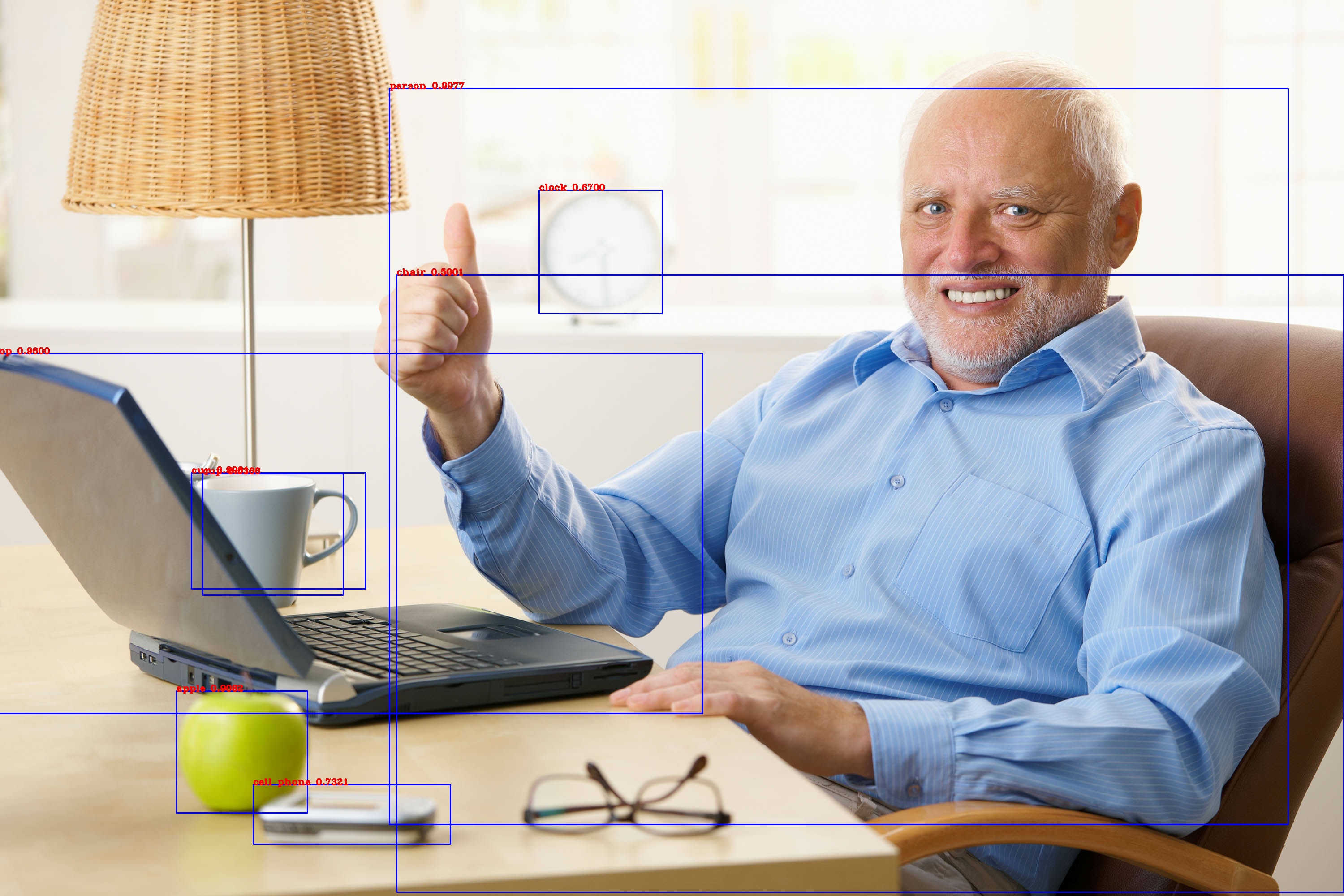
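
The printed boxes appear to be normalized `[x1, y1, x2, y2]` corners relative to the image width and height (the values fall roughly in the 0 to 1 range). Under that assumption, converting a box to pixel coordinates is a matter of scaling by the image size. The helper name and the 640x480 image size below are illustrative and not taken from the post.

```python
def to_pixel_box(box, image_width, image_height):
    """Scale a normalized [x1, y1, x2, y2] box to integer pixel coordinates."""
    x1, y1, x2, y2 = box
    return (
        int(x1 * image_width),
        int(y1 * image_height),
        int(x2 * image_width),
        int(y2 * image_height),
    )


# "chair" box from the output above, on a hypothetical 640x480 image
print(to_pixel_box([0.0185187, 0.34200275, 0.17385134, 0.62783295], 640, 480))
```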

FLAGS.image = './data/meme.jpg'

Image(filename=FLAGS.image, width=500)

output = detector()

display(Image(data=bytes(cv2.imencode('.jpg', output)[1]), width=500))

time:0.12727093696594238

cup, 0.9980731010437012, [0.14266218 0.52777606 0.27184254 0.65748256]

person, 0.9976975321769714, [0.28982913 0.09854656 0.95846486 0.9202166 ]

laptop, 0.9599869847297668, [-0.01710668 0.3949369 0.5229728 0.7962775 ]

apple, 0.9081627726554871, [0.13114463 0.77143586 0.22893356 0.90729535]

cell phone, 0.7321377396583557, [0.18850097 0.87569416 0.3350185 0.9422209 ]

clock, 0.6700013279914856, [0.4010809 0.21244614 0.49267417 0.35021502]

cup, 0.5366386771202087, [0.15088229 0.52855617 0.25541586 0.66439587]

chair, 0.500112771987915, [0.29507315 0.30677179 0.99988604 0.9955945 ]
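
The output above contains two `cup` detections with nearly the same box. Intersection over union (IoU) is the usual way to quantify how much two boxes overlap. This is a minimal sketch, assuming `[x1, y1, x2, y2]` boxes; the function name is hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two [x1, y1, x2, y2] boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The two "cup" boxes from the output above
cup_1 = [0.14266218, 0.52777606, 0.27184254, 0.65748256]
cup_2 = [0.15088229, 0.52855617, 0.25541586, 0.66439587]
print(f"IoU between the two cup boxes: {iou(cup_1, cup_2):.2f}")
```

A high IoU between two boxes of the same class suggests they describe the same object, in which case keeping only the higher-scoring one is a common post-processing choice.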

FLAGS.image = './data/meme2.jpeg'

Image(filename=FLAGS.image, width=500)

output = detector()

display(Image(data=bytes(cv2.imencode('.jpg', output)[1]), width=500))

time:0.1269540786743164

person, 0.9996726512908936, [0.10497867 0.17179069 0.47821975 0.9957652 ]

person, 0.9945406913757324, [0.45602348 0.06149811 0.72744524 0.9832987 ]

person, 0.9557743072509766, [0.706931 0.22946644 0.91559696 1.020992 ]

person, 0.9102910757064819, [0.93971944 0.43444303 1.0022458 0.89688945]

person, 0.619599461555481, [0.08753047 0.41167545 0.18400964 0.7732612 ]

person, 0.5340757369995117, [0.4782502 0.07239679 0.7743185 0.9673748 ]
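
Some coordinates in the outputs fall slightly outside the 0 to 1 range (for example `1.020992` above, or `-0.01710668` for the laptop). If the boxes are used for cropping or further processing, clamping them to the image first avoids out-of-bounds indices. The following is a sketch, assuming normalized `[x1, y1, x2, y2]` boxes; `clip_box` is a hypothetical helper.

```python
def clip_box(box, low=0.0, high=1.0):
    """Clamp every coordinate of a normalized box to the [low, high] range."""
    return [min(max(float(v), low), high) for v in box]


# Third "person" box from the output above; its y2 exceeds 1.0
print(clip_box([0.706931, 0.22946644, 0.91559696, 1.020992]))
```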

Reference

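- This post summarizes the lectures given by instructor Sim Seon-jo (심선조) in the SeSAC course "Training Application SW Developers Using AI Natural Language Processing and Computer Vision Technology".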
