Deep Learning (18-4) - YOLOv5 Pothole Detection Model

YOLO (You Only Look Once)

Pothole Detection Model

Downloading the YOLOv5 Model

In [1]:

%cd /content

!git clone https://github.com/ultralytics/yolov5

%cd yolov5

!pip install -qr requirements.txt

Out [1]:

/content

Cloning into 'yolov5'...

remote: Enumerating objects: 14566, done.

remote: Counting objects: 100% (88/88), done.

remote: Compressing objects: 100% (62/62), done.

remote: Total 14566 (delta 44), reused 50 (delta 26), pack-reused 14478

Receiving objects: 100% (14566/14566), 13.68 MiB | 7.60 MiB/s, done.

Resolving deltas: 100% (10024/10024), done.

/content/yolov5

|████████████████████████████████| 182 kB 29.1 MB/s

|████████████████████████████████| 62 kB 1.4 MB/s

|████████████████████████████████| 1.6 MB 54.9 MB/s

Downloading the Dataset

- Pothole dataset: https://public.roboflow.com/object-detection/pothole

In [2]:

!pwd

Out [2]:

/content/yolov5

In [3]:

%mkdir /content/yolov5/pothole

%cd /content/yolov5/pothole

!curl -L "https://public.roboflow.com/ds/p8RvsTNeEI?key=J6HYjrLfDA" > roboflow.zip; unzip roboflow.zip; rm roboflow.zip

Out [3]:

/content/yolov5/pothole

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 901 100 901 0 0 1912 0 --:--:-- --:--:-- --:--:-- 1912

100 46.0M 100 46.0M 0 0 9193k 0 0:00:05 0:00:05 --:--:-- 11.3M

Archive: roboflow.zip

extracting: README.dataset.txt

extracting: README.roboflow.txt

extracting: data.yaml

creating: test/

creating: test/images/

...

extracting: valid/labels/img-93_jpg.rf.7dc83bef4593a070f4cea15ebec8a527.txt

extracting: valid/labels/img-94_jpg.rf.26ce6c0878886e2b49b0191cf4f952bb.txt
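Each image under train/, valid/ and test/ has a matching .txt file under labels/. In the YOLO format that YOLOv5 reads, each line is `class x_center y_center width height`, with the four box values normalized to 0-1 by the image width and height. The following minimal sketch, assuming that format, converts a label line into pixel corner coordinates; `yolo_line_to_xyxy` and `read_label_file` are hypothetical helpers, not part of YOLOv5.

```python
def yolo_line_to_xyxy(line, img_w, img_h):
    """Convert one YOLO label line to (class_id, x1, y1, x2, y2) in pixels.

    Assumes the YOLO txt format 'class x_center y_center width height',
    with the four box values normalized to [0, 1].
    """
    parts = line.split()
    class_id = int(parts[0])
    xc, yc, w, h = (float(v) for v in parts[1:5])
    # Scale the normalized values back to pixel units
    xc, w = xc * img_w, w * img_w
    yc, h = yc * img_h, h * img_h
    # Convert center/size to top-left and bottom-right corners
    return class_id, xc - w / 2, yc - h / 2, xc + w / 2, yc + h / 2


def read_label_file(path, img_w, img_h):
    """Parse every non-empty line of a YOLO label file."""
    with open(path) as f:
        return [yolo_line_to_xyxy(line, img_w, img_h) for line in f if line.strip()]
```

For example, `yolo_line_to_xyxy("0 0.5 0.5 0.25 0.5", 640, 640)` returns `(0, 240.0, 160.0, 400.0, 480.0)`: a box centered in a 640x640 image, 160 px wide and 320 px tall.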

In [4]:

# Load the image file lists

from glob import glob

train_img_list = glob('/content/yolov5/pothole/train/images/*.jpg')

test_img_list = glob('/content/yolov5/pothole/test/images/*.jpg')

valid_img_list = glob('/content/yolov5/pothole/valid/images/*.jpg')

len(train_img_list), len(test_img_list), len(valid_img_list)

Out [4]:

(465, 67, 133)

In [5]:

# Save the file lists to text files

with open('/content/yolov5/pothole/train.txt', 'w') as f:
    f.write('\n'.join(train_img_list) + '\n')

with open('/content/yolov5/pothole/test.txt', 'w') as f:
    f.write('\n'.join(test_img_list) + '\n')

with open('/content/yolov5/pothole/valid.txt', 'w') as f:
    f.write('\n'.join(valid_img_list) + '\n')
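YOLOv5 locates each image's label file by replacing the last `/images/` directory in the path with `/labels/` and the file extension with `.txt` (this mirrors the `img2label_paths` helper in YOLOv5's `utils/dataloaders.py`). A minimal sketch, assuming that convention, that checks which images in the lists above have no label file; `label_path_for` and `missing_labels` are hypothetical names.

```python
import os


def label_path_for(img_path):
    """Map .../images/name.jpg to .../labels/name.txt (YOLOv5 convention)."""
    img_dir = f"{os.sep}images{os.sep}"
    lbl_dir = f"{os.sep}labels{os.sep}"
    # Replace only the last '/images/' component, then swap the extension
    swapped = lbl_dir.join(img_path.rsplit(img_dir, 1))
    return swapped.rsplit(".", 1)[0] + ".txt"


def missing_labels(img_paths):
    """Return the image paths whose label file does not exist."""
    return [p for p in img_paths if not os.path.exists(label_path_for(p))]
```

If the dataset extracted cleanly, `missing_labels(train_img_list)` should return an empty list.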

In [6]:

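# Cell magic for writing yaml files from a template
# ({name} placeholders are filled from the notebook's global variables)

from IPython.core.magic import register_line_cell_magic

@register_line_cell_magic
def writetemplate(line, cell):
    with open(line, 'w') as f:
        f.write(cell.format(**globals()))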
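`writetemplate` fills each `{name}` placeholder in the cell body with `str.format`, using the notebook's global variables; this is how `{num_classes}` gets substituted in the model config later on. A side effect is that any literal `{` or `}` in the template must be doubled (`{{`, `}}`), or `format` raises an error. A minimal sketch of the same substitution outside IPython; `render_template` is a hypothetical name.

```python
def render_template(template, variables):
    """Fill {name} placeholders the same way writetemplate does (str.format)."""
    return template.format(**variables)


template = "nc: {num_classes}\nliteral_braces: {{not a placeholder}}\n"
print(render_template(template, {"num_classes": "1"}))
# nc: 1
# literal_braces: {not a placeholder}
```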

In [7]:

%cat /content/yolov5/pothole/data.yaml

Out [7]:

train: ../train/images

val: ../valid/images

nc: 1

names: ['pothole']

In [8]:

%%writetemplate /content/yolov5/pothole/data.yaml

train: ./pothole/train/images

test: ./pothole/test/images

val: ./pothole/valid/images

nc: 1

names: ['pothole']

In [9]:

%cat /content/yolov5/pothole/data.yaml

Out [9]:

train: ./pothole/train/images

test: ./pothole/test/images

val: ./pothole/valid/images

nc: 1

names: ['pothole']

Model Configuration

In [10]:

import yaml

with open('/content/yolov5/pothole/data.yaml', 'r') as stream:
    num_classes = str(yaml.safe_load(stream)['nc'])  # read the number of classes

num_classes

Out [10]:

'1'

In [11]:

# Inspect the downloaded model config

%cat /content/yolov5/models/yolov5s.yaml

Out [11]:

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]
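In yolov5s.yaml, `depth_multiple` and `width_multiple` scale the base architecture: a layer's repeat count `number` becomes `max(round(number * depth_multiple), 1)` (layers with number 1 stay at 1), and its output channels become `channels * width_multiple` rounded up to a multiple of 8. This follows YOLOv5's `parse_model` in `models/yolo.py`; the sketch below is a simplified reimplementation, not that function. Its results match the `n` column and channel arguments in the training log further down (for example, C3 repeats of 1, 2, 3 and a 512-channel SPPF).

```python
import math

DEPTH_MULTIPLE = 0.33  # yolov5s
WIDTH_MULTIPLE = 0.50  # yolov5s


def scaled_repeats(number, gd=DEPTH_MULTIPLE):
    """Repeat count after depth scaling (single layers stay at 1)."""
    return max(round(number * gd), 1) if number > 1 else number


def scaled_channels(channels, gw=WIDTH_MULTIPLE, divisor=8):
    """Output channels after width scaling, rounded up to a multiple of divisor."""
    return math.ceil(channels * gw / divisor) * divisor


# Backbone rows from yolov5s.yaml: (number, module, output channels)
backbone = [(1, "Conv", 64), (1, "Conv", 128), (3, "C3", 128), (1, "Conv", 256),
            (6, "C3", 256), (1, "Conv", 512), (9, "C3", 512), (1, "Conv", 1024),
            (3, "C3", 1024), (1, "SPPF", 1024)]

for number, module, ch in backbone:
    print(module, scaled_repeats(number), scaled_channels(ch))
```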

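Save a customized copy of the model config, with nc filled in from num_classes. Note that %%writetemplate is a cell magic, so it must be the first line of the cell.

In [12]:

%%writetemplate /content/yolov5/models/custom_yolov5s.yaml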

# Parameters

nc: {num_classes} # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]

In [13]:

# Verify the changes

%cat /content/yolov5/models/custom_yolov5s.yaml

Out [13]:

# Parameters

nc: 1 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:

- [10,13, 16,30, 33,23] # P3/8

- [30,61, 62,45, 59,119] # P4/16

- [116,90, 156,198, 373,326] # P5/32

# YOLOv5 v6.0 backbone

backbone:

# [from, number, module, args]

[[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2

[-1, 1, Conv, [128, 3, 2]], # 1-P2/4

[-1, 3, C3, [128]],

[-1, 1, Conv, [256, 3, 2]], # 3-P3/8

[-1, 6, C3, [256]],

[-1, 1, Conv, [512, 3, 2]], # 5-P4/16

[-1, 9, C3, [512]],

[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32

[-1, 3, C3, [1024]],

[-1, 1, SPPF, [1024, 5]], # 9

]

# YOLOv5 v6.0 head

head:

[[-1, 1, Conv, [512, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 6], 1, Concat, [1]], # cat backbone P4

[-1, 3, C3, [512, False]], # 13

[-1, 1, Conv, [256, 1, 1]],

[-1, 1, nn.Upsample, [None, 2, 'nearest']],

[[-1, 4], 1, Concat, [1]], # cat backbone P3

[-1, 3, C3, [256, False]], # 17 (P3/8-small)

[-1, 1, Conv, [256, 3, 2]],

[[-1, 14], 1, Concat, [1]], # cat head P4

[-1, 3, C3, [512, False]], # 20 (P4/16-medium)

[-1, 1, Conv, [512, 3, 2]],

[[-1, 10], 1, Concat, [1]], # cat head P5

[-1, 3, C3, [1024, False]], # 23 (P5/32-large)

[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)

]
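With `nc: 1`, each of the three anchors at every grid cell predicts 4 box values, 1 objectness score and `nc` class scores, so each Detect output has `3 * (nc + 5) = 18` channels. The P3/8, P4/16 and P5/32 comments give the strides, so a 640x640 input produces 80x80, 40x40 and 20x20 grids. A short worked calculation, assuming the 128/256/512 input channels that the training log below reports for the Detect layer; it reproduces the 16,182 parameters the log lists for `models.yolo.Detect`.

```python
nc = 1                         # number of classes (custom_yolov5s.yaml)
na = 3                         # anchors per scale (3 pairs per row in `anchors`)
strides = [8, 16, 32]          # P3/8, P4/16, P5/32
in_channels = [128, 256, 512]  # Detect inputs after width scaling
img_size = 640

outputs_per_anchor = nc + 5    # x, y, w, h, objectness + class scores
out_channels = na * outputs_per_anchor

# Each scale uses a 1x1 conv with bias: in_ch * out_ch weights + out_ch biases
detect_params = sum(c * out_channels + out_channels for c in in_channels)

grids = [img_size // s for s in strides]
total_predictions = na * sum(g * g for g in grids)

print(out_channels, detect_params, grids, total_predictions)
# -> 18 16182 [80, 40, 20] 25200
```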

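Training

- img: input image size
- batch: batch size
- epochs: number of training epochs
- data: path to the dataset yaml file
- cfg: model configuration file
- weights: path to the initial weights
- name: name of the run (results are saved to runs/train/<name>)
- nosave: save only the final checkpoint
- cache: cache images for faster training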
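These options are passed to train.py as command-line flags: value options take an argument, while switches such as `--cache` and `--nosave` are given on their own. A minimal sketch that assembles the same training command used below as an argument list (suitable for `subprocess.run`); `build_train_cmd` is a hypothetical helper.

```python
def build_train_cmd(script="train.py", **options):
    """Turn keyword options into a CLI argument list.

    True becomes a bare switch (e.g. --cache), False/None are skipped,
    and any other value becomes '--key value'.
    """
    cmd = ["python", script]
    for key, value in options.items():
        if value is True:
            cmd.append(f"--{key}")
        elif value is not False and value is not None:
            cmd.extend([f"--{key}", str(value)])
    return cmd


cmd = build_train_cmd(img=640, batch=32, epochs=100, data="./pothole/data.yaml",
                      cfg="./models/custom_yolov5s.yaml", name="pothole_result",
                      cache=True)
print(" ".join(cmd))
# subprocess.run(cmd, check=True)  # run from /content/yolov5
```

Checking `value is not False` rather than `value not in (False, None)` keeps numeric zeros such as `freeze=0`, since `0 == False` in Python.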

In [14]:

!pwd

Out [14]:

/content/yolov5/pothole

In [15]:

%cd /content/yolov5/

Out [15]:

/content/yolov5

In [16]:

%%time

!python train.py --img 640 --batch 32 --epochs 100 --data ./pothole/data.yaml \

--cfg ./models/custom_yolov5s.yaml --name pothole_result --cache

Out [16]:

train: weights=yolov5s.pt, cfg=./models/custom_yolov5s.yaml, data=./pothole/data.yaml, hyp=data/hyps/hyp.scratch-low.yaml, epochs=100, batch_size=32, imgsz=640, rect=False, resume=False, nosave=False, noval=False, noautoanchor=False, noplots=False, evolve=None, bucket=, cache=ram, image_weights=False, device=, multi_scale=False, single_cls=False, optimizer=SGD, sync_bn=False, workers=8, project=runs/train, name=pothole_result, exist_ok=False, quad=False, cos_lr=False, label_smoothing=0.0, patience=100, freeze=[0], save_period=-1, seed=0, local_rank=-1, entity=None, upload_dataset=False, bbox_interval=-1, artifact_alias=latest

github: up to date with https://github.com/ultralytics/yolov5 ✅

YOLOv5 🚀 v7.0-37-gb2f94e8 Python-3.8.16 torch-1.13.0+cu116 CUDA:0 (Tesla T4, 15110MiB)

hyperparameters: lr0=0.01, lrf=0.01, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0, copy_paste=0.0

ClearML: run 'pip install clearml' to automatically track, visualize and remotely train YOLOv5 🚀 in ClearML

Comet: run 'pip install comet_ml' to automatically track and visualize YOLOv5 🚀 runs in Comet

TensorBoard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/

Downloading https://ultralytics.com/assets/Arial.ttf to /root/.config/Ultralytics/Arial.ttf...

100% 755k/755k [00:00<00:00, 157MB/s]

Downloading https://github.com/ultralytics/yolov5/releases/download/v7.0/yolov5s.pt to yolov5s.pt...

100% 14.1M/14.1M [00:00<00:00, 324MB/s]

from n params module arguments

0 -1 1 3520 models.common.Conv [3, 32, 6, 2, 2]

1 -1 1 18560 models.common.Conv [32, 64, 3, 2]

2 -1 1 18816 models.common.C3 [64, 64, 1]

3 -1 1 73984 models.common.Conv [64, 128, 3, 2]

4 -1 2 115712 models.common.C3 [128, 128, 2]

5 -1 1 295424 models.common.Conv [128, 256, 3, 2]

6 -1 3 625152 models.common.C3 [256, 256, 3]

7 -1 1 1180672 models.common.Conv [256, 512, 3, 2]

8 -1 1 1182720 models.common.C3 [512, 512, 1]

9 -1 1 656896 models.common.SPPF [512, 512, 5]

10 -1 1 131584 models.common.Conv [512, 256, 1, 1]

11 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

12 [-1, 6] 1 0 models.common.Concat [1]

13 -1 1 361984 models.common.C3 [512, 256, 1, False]

14 -1 1 33024 models.common.Conv [256, 128, 1, 1]

15 -1 1 0 torch.nn.modules.upsampling.Upsample [None, 2, 'nearest']

16 [-1, 4] 1 0 models.common.Concat [1]

17 -1 1 90880 models.common.C3 [256, 128, 1, False]

18 -1 1 147712 models.common.Conv [128, 128, 3, 2]

19 [-1, 14] 1 0 models.common.Concat [1]

20 -1 1 296448 models.common.C3 [256, 256, 1, False]

21 -1 1 590336 models.common.Conv [256, 256, 3, 2]

22 [-1, 10] 1 0 models.common.Concat [1]

23 -1 1 1182720 models.common.C3 [512, 512, 1, False]

24 [17, 20, 23] 1 16182 models.yolo.Detect [1, [[10, 13, 16, 30, 33, 23], [30, 61, 62, 45, 59, 119], [116, 90, 156, 198, 373, 326]], [128, 256, 512]]

custom_YOLOv5s summary: 214 layers, 7022326 parameters, 7022326 gradients, 15.9 GFLOPs

Transferred 342/349 items from yolov5s.pt

AMP: checks passed ✅

optimizer: SGD(lr=0.01) with parameter groups 57 weight(decay=0.0), 60 weight(decay=0.0005), 60 bias

albumentations: Blur(p=0.01, blur_limit=(3, 7)), MedianBlur(p=0.01, blur_limit=(3, 7)), ToGray(p=0.01), CLAHE(p=0.01, clip_limit=(1, 4.0), tile_grid_size=(8, 8))

train: Scanning /content/yolov5/pothole/train/labels... 465 images, 0 backgrounds, 0 corrupt: 100% 465/465 [00:00<00:00, 1802.22it/s]

train: New cache created: /content/yolov5/pothole/train/labels.cache

train: Caching images (0.4GB ram): 100% 465/465 [00:02<00:00, 186.01it/s]

val: Scanning /content/yolov5/pothole/valid/labels... 133 images, 0 backgrounds, 0 corrupt: 100% 133/133 [00:00<00:00, 691.88it/s]

val: New cache created: /content/yolov5/pothole/valid/labels.cache

val: Caching images (0.1GB ram): 100% 133/133 [00:01<00:00, 70.82it/s]

AutoAnchor: 4.30 anchors/target, 0.999 Best Possible Recall (BPR). Current anchors are a good fit to dataset ✅

Plotting labels to runs/train/pothole_result/labels.jpg...

Image sizes 640 train, 640 val

Using 2 dataloader workers

Logging results to runs/train/pothole_result

Starting training for 100 epochs...

Epoch GPU_mem box_loss obj_loss cls_loss Instances Size

0/99 7.32G 0.1145 0.03855 0 112 640: 100% 15/15 [00:09<00:00, 1.55it/s]

Class Images Instances P R mAP50 mAP50-95: 100% 3/3 [00:03<00:00, 1.13s/it]

all 133 330 0.00405 0.406 0.00702 0.00159

Epoch GPU_mem box_loss obj_loss cls_loss Instances Size

1/99 9.67G 0.09401 0.04007 0 80 640: 100% 15/15 [00:06<00:00, 2.39it/s]

Class Images Instances P R mAP50 mAP50-95: 100% 3/3 [00:01<00:00, 1.88it/s]

all 133 330 0.19 0.13 0.0611 0.0167

...

Epoch GPU_mem box_loss obj_loss cls_loss Instances Size

98/99 9.67G 0.01908 0.01506 0 105 640: 100% 15/15 [00:05<00:00, 2.59it/s]

Class Images Instances P R mAP50 mAP50-95: 100% 3/3 [00:01<00:00, 2.19it/s]

all 133 330 0.775 0.724 0.76 0.483

Epoch GPU_mem box_loss obj_loss cls_loss Instances Size

99/99 9.67G 0.01938 0.01471 0 71 640: 100% 15/15 [00:05<00:00, 2.59it/s]

Class Images Instances P R mAP50 mAP50-95: 100% 3/3 [00:01<00:00, 2.10it/s]

all 133 330 0.792 0.713 0.764 0.488

100 epochs completed in 0.216 hours.

Optimizer stripped from runs/train/pothole_result/weights/last.pt, 14.4MB

Optimizer stripped from runs/train/pothole_result/weights/best.pt, 14.4MB

Validating runs/train/pothole_result/weights/best.pt...

Fusing layers...

custom_YOLOv5s summary: 157 layers, 7012822 parameters, 0 gradients, 15.8 GFLOPs

Class Images Instances P R mAP50 mAP50-95: 100% 3/3 [00:01<00:00, 1.52it/s]

all 133 330 0.767 0.708 0.759 0.488

Results saved to runs/train/pothole_result

CPU times: user 8.61 s, sys: 997 ms, total: 9.61 s

Wall time: 13min 46s
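The progress counters in the logs follow from the dataset and batch sizes: 465 training images with --batch 32 give ceil(465/32) = 15 iterations per epoch (15/15), and train.py validates with twice the training batch size, so the 133 validation images take 3 batches (3/3). The standalone val.py runs below use its default batch size of 32, giving 5 and 3 batches. A quick check:

```python
import math


def num_batches(num_images, batch_size):
    """Number of batches (iterations) needed to see every image once."""
    return math.ceil(num_images / batch_size)


train_iters = num_batches(465, 32)       # training loop, --batch 32
val_during_train = num_batches(133, 64)  # train.py validates with 2x batch
val_standalone = num_batches(133, 32)    # val.py, batch_size=32
test_standalone = num_batches(67, 32)    # val.py --task test

print(train_iters, val_during_train, val_standalone, test_standalone)
# -> 15 3 5 3
```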

In [17]:

%load_ext tensorboard

%tensorboard --logdir runs

Out [17]:

<IPython.core.display.Javascript object>

In [18]:

!ls /content/yolov5/runs/train/pothole_result

Out [18]:

confusion_matrix.png results.png

events.out.tfevents.1671278074.2b026896312d.162.0 train_batch0.jpg

F1_curve.png train_batch1.jpg

hyp.yaml train_batch2.jpg

labels_correlogram.jpg val_batch0_labels.jpg

labels.jpg val_batch0_pred.jpg

opt.yaml val_batch1_labels.jpg

P_curve.png val_batch1_pred.jpg

PR_curve.png val_batch2_labels.jpg

R_curve.png val_batch2_pred.jpg

results.csv weights

In [19]:

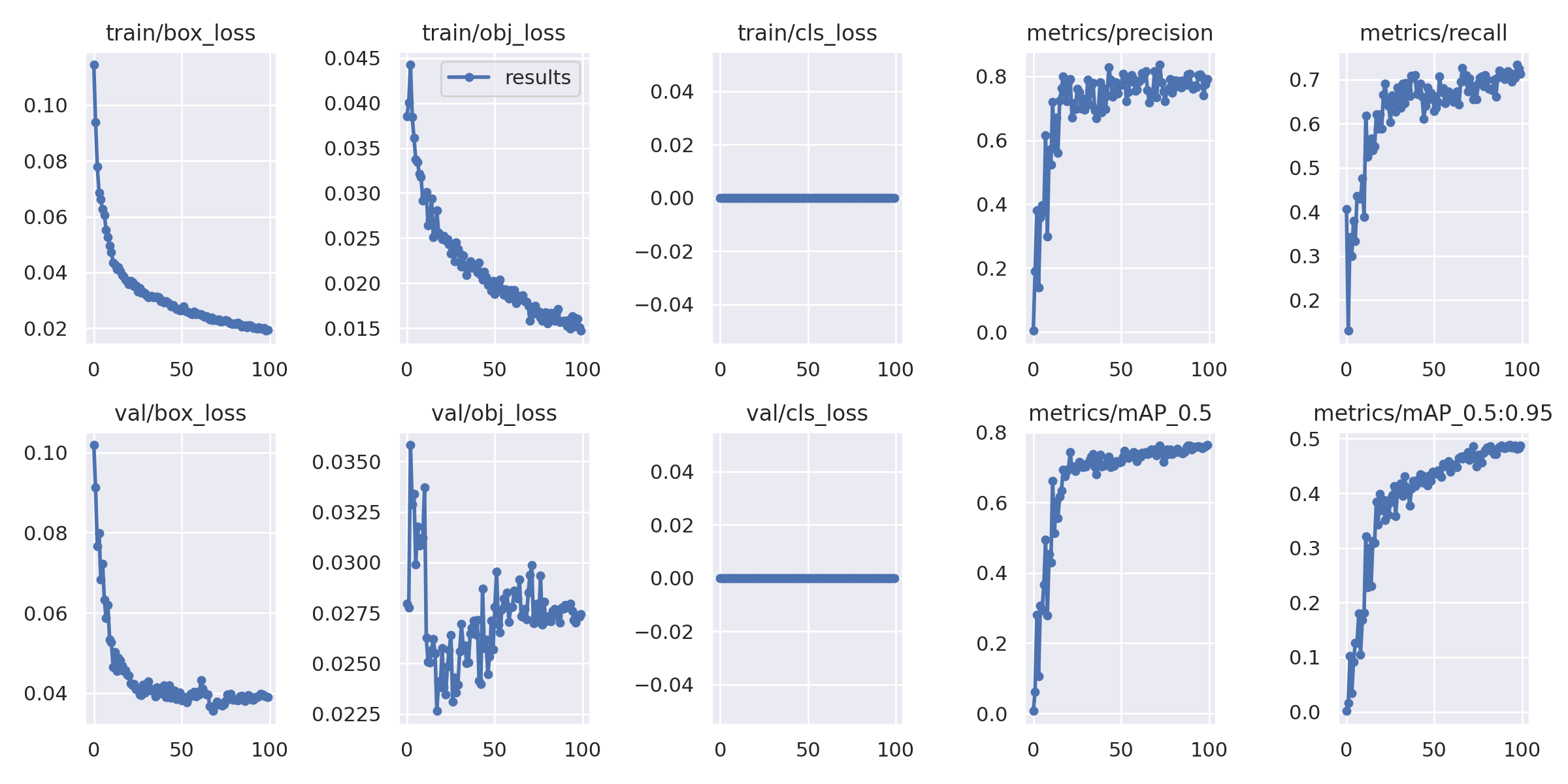

from IPython.display import Image

Image(filename='/content/yolov5/runs/train/pothole_result/results.png', width=800)

Out [19]:

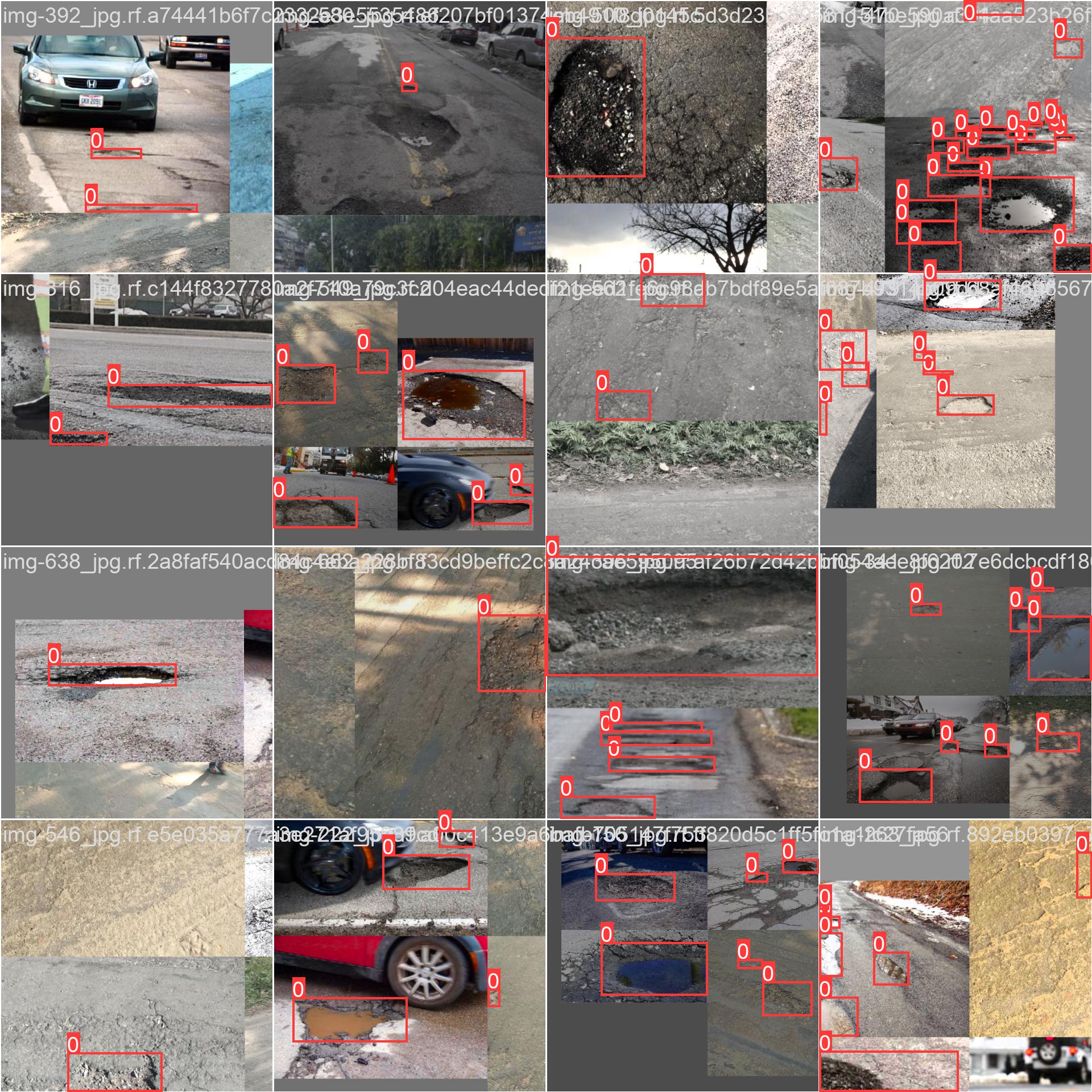

In [20]:

Image(filename='/content/yolov5/runs/train/pothole_result/train_batch0.jpg', width=800)

Out [20]:

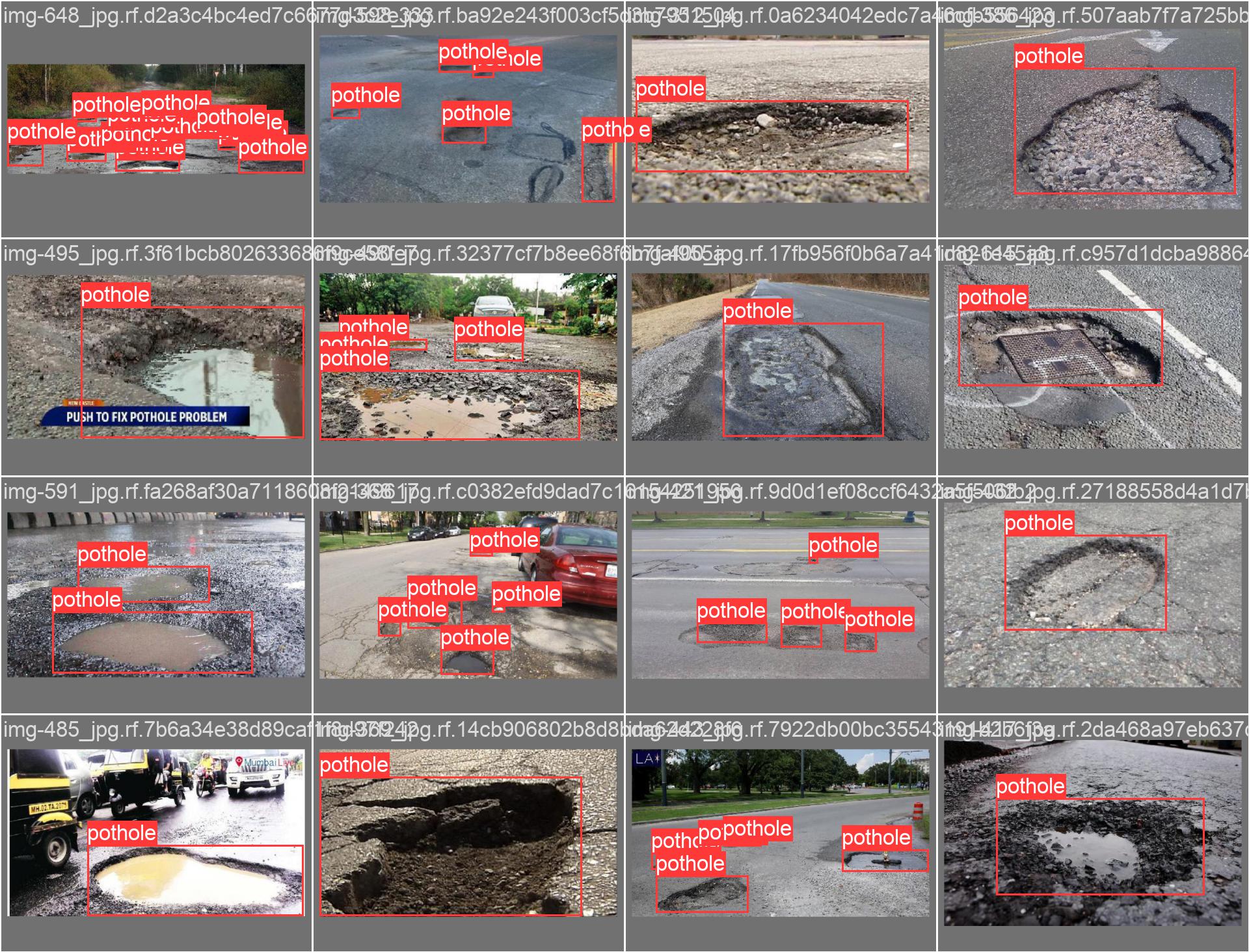

In [21]:

Image(filename='/content/yolov5/runs/train/pothole_result/val_batch0_labels.jpg', width=800)

Out [21]:

Validation

In [22]:

# validation data

!python val.py --weights runs/train/pothole_result/weights/best.pt \

--data ./pothole/data.yaml --img 640 --iou 0.65 --half

Out [22]:

val: data=./pothole/data.yaml, weights=['runs/train/pothole_result/weights/best.pt'], batch_size=32, imgsz=640, conf_thres=0.001, iou_thres=0.65, max_det=300, task=val, device=, workers=8, single_cls=False, augment=False, verbose=False, save_txt=False, save_hybrid=False, save_conf=False, save_json=False, project=runs/val, name=exp, exist_ok=False, half=True, dnn=False

YOLOv5 🚀 v7.0-37-gb2f94e8 Python-3.8.16 torch-1.13.0+cu116 CUDA:0 (Tesla T4, 15110MiB)

Fusing layers...

custom_YOLOv5s summary: 157 layers, 7012822 parameters, 0 gradients, 15.8 GFLOPs

val: Scanning /content/yolov5/pothole/valid/labels.cache... 133 images, 0 backgrounds, 0 corrupt: 100% 133/133 [00:00<?, ?it/s]

Class Images Instances P R mAP50 mAP50-95: 100% 5/5 [00:04<00:00, 1.18it/s]

all 133 330 0.757 0.718 0.755 0.484

Speed: 2.2ms pre-process, 7.5ms inference, 2.4ms NMS per image at shape (32, 3, 640, 640)

Results saved to runs/val/exp
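The mAP50 column counts a predicted box as correct when its IoU (intersection over union) with a ground-truth box is at least 0.5, while mAP50-95 averages AP over the ten IoU thresholds 0.50, 0.55, ..., 0.95. Separately, --iou 0.65 sets the IoU threshold used by non-maximum suppression. A minimal sketch of IoU for corner-format boxes (x1, y1, x2, y2), with made-up example boxes:

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# mAP50-95 evaluates at these 10 IoU thresholds
iou_thresholds = [round(0.5 + 0.05 * i, 2) for i in range(10)]

gt = (100, 100, 200, 200)
pred = (120, 110, 220, 210)
iou = box_iou(gt, pred)
print(iou, [t for t in iou_thresholds if iou >= t])
# -> 0.5625 [0.5, 0.55]
```

This prediction would count as a hit for mAP50 but only at the two lowest thresholds of mAP50-95, which is why mAP50-95 is always the lower number.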

In [23]:

# test data

!python val.py --weights runs/train/pothole_result/weights/best.pt \

--data ./pothole/data.yaml --img 640 --task test

Out [23]:

val: data=./pothole/data.yaml, weights=['runs/train/pothole_result/weights/best.pt'], batch_size=32, imgsz=640, conf_thres=0.001, iou_thres=0.6, max_det=300, task=test, device=, workers=8, single_cls=False, augment=False, verbose=False, save_txt=False, save_hybrid=False, save_conf=False, save_json=False, project=runs/val, name=exp, exist_ok=False, half=False, dnn=False

YOLOv5 🚀 v7.0-37-gb2f94e8 Python-3.8.16 torch-1.13.0+cu116 CUDA:0 (Tesla T4, 15110MiB)

Fusing layers...

custom_YOLOv5s summary: 157 layers, 7012822 parameters, 0 gradients, 15.8 GFLOPs

test: Scanning /content/yolov5/pothole/test/labels... 67 images, 0 backgrounds, 0 corrupt: 100% 67/67 [00:00<00:00, 509.49it/s]

test: New cache created: /content/yolov5/pothole/test/labels.cache

Class Images Instances P R mAP50 mAP50-95: 100% 3/3 [00:02<00:00, 1.24it/s]

all 67 154 0.825 0.779 0.822 0.524

Speed: 0.2ms pre-process, 8.8ms inference, 3.4ms NMS per image at shape (32, 3, 640, 640)

Results saved to runs/val/exp2
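P and R can be combined into the F1 score, F1 = 2PR / (P + R), which is what F1_curve.png plots against the confidence threshold. A quick check using the P and R values reported above for the test and validation sets:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.825, 0.779), 3))  # test set       -> 0.801
print(round(f1_score(0.757, 0.718), 3))  # validation set -> 0.737
```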

Inference

In [24]:

%ls runs/train/pothole_result/weights/

Out [24]:

best.pt last.pt
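last.pt holds the weights from the final epoch, while best.pt holds the epoch with the highest fitness, which YOLOv5 computes as 0.1 x mAP50 + 0.9 x mAP50-95 (the `fitness` function in `utils/metrics.py`). A minimal sketch that recomputes this from results.csv; it assumes the v7.0 column names `epoch`, `metrics/mAP_0.5` and `metrics/mAP_0.5:0.95`, and strips the padding spaces YOLOv5 writes into the header. `best_epoch` is a hypothetical helper.

```python
import csv
import io


def fitness(map50, map50_95):
    """YOLOv5-style fitness: 10% mAP@0.5 + 90% mAP@0.5:0.95."""
    return 0.1 * map50 + 0.9 * map50_95


def best_epoch(csv_text):
    """Return (epoch, fitness) for the row with the highest fitness."""
    reader = csv.reader(io.StringIO(csv_text))
    header = [h.strip() for h in next(reader)]  # header names are space-padded
    i_ep = header.index("epoch")
    i_50 = header.index("metrics/mAP_0.5")
    i_5095 = header.index("metrics/mAP_0.5:0.95")
    best = None
    for row in reader:
        if not row:
            continue
        score = fitness(float(row[i_50]), float(row[i_5095]))
        if best is None or score > best[1]:
            best = (int(float(row[i_ep])), score)
    return best
```

Usage: `best_epoch(open('runs/train/pothole_result/results.csv').read())`. For the last two epochs in the training log (mAP50 0.76 and 0.764, mAP50-95 0.483 and 0.488), the fitness values are about 0.511 and 0.516.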

In [25]:

!python detect.py --weights runs/train/pothole_result/weights/best.pt \

--img 640 --conf 0.4 --source ./pothole/test/images

Out [25]:

detect: weights=['runs/train/pothole_result/weights/best.pt'], source=./pothole/test/images, data=data/coco128.yaml, imgsz=[640, 640], conf_thres=0.4, iou_thres=0.45, max_det=1000, device=, view_img=False, save_txt=False, save_conf=False, save_crop=False, nosave=False, classes=None, agnostic_nms=False, augment=False, visualize=False, update=False, project=runs/detect, name=exp, exist_ok=False, line_thickness=3, hide_labels=False, hide_conf=False, half=False, dnn=False, vid_stride=1

YOLOv5 🚀 v7.0-37-gb2f94e8 Python-3.8.16 torch-1.13.0+cu116 CUDA:0 (Tesla T4, 15110MiB)

Fusing layers...

custom_YOLOv5s summary: 157 layers, 7012822 parameters, 0 gradients, 15.8 GFLOPs

image 1/67 /content/yolov5/pothole/test/images/img-105_jpg.rf.3fe9dff3d1631e79ecb480ff403bcb86.jpg: 640x640 1 pothole, 12.3ms

image 2/67 /content/yolov5/pothole/test/images/img-107_jpg.rf.2e40485785f6e5e2efec404301b235c2.jpg: 640x640 2 potholes, 12.4ms

...

image 66/67 /content/yolov5/pothole/test/images/img-82_jpg.rf.851a545b1b004e7303844e4779d3272c.jpg: 640x640 2 potholes, 11.2ms

image 67/67 /content/yolov5/pothole/test/images/img-98_jpg.rf.667209472947ff4d519f65c6e206a7c3.jpg: 640x640 2 potholes, 9.6ms

Speed: 0.4ms pre-process, 10.1ms inference, 0.8ms NMS per image at shape (1, 3, 640, 640)

Results saved to runs/detect/exp
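detect.py keeps only boxes whose confidence is above --conf (0.4 here) and then applies non-maximum suppression with iou_thres (0.45 by default, as the log shows), discarding boxes that overlap a higher-scoring box by more than that IoU. YOLOv5 does this on tensors with `torchvision.ops.nms`; the following is a simplified pure-Python sketch of greedy single-class NMS, with made-up example detections.

```python
def box_iou(a, b):
    """IoU of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(detections, conf_thres=0.4, iou_thres=0.45):
    """Greedy NMS for one class.

    detections: list of (x1, y1, x2, y2, confidence).
    Returns the kept detections, highest confidence first.
    """
    # 1) drop low-confidence boxes
    candidates = [d for d in detections if d[4] > conf_thres]
    # 2) visit boxes from highest to lowest confidence
    candidates.sort(key=lambda d: d[4], reverse=True)
    kept = []
    for det in candidates:
        # keep a box only if it does not overlap an already-kept box too much
        if all(box_iou(det[:4], k[:4]) <= iou_thres for k in kept):
            kept.append(det)
    return kept


dets = [(100, 100, 200, 200, 0.9),  # strong detection
        (105, 105, 205, 205, 0.8),  # near-duplicate of the first (IoU ~0.82)
        (300, 300, 380, 360, 0.6),  # a separate pothole
        (310, 310, 390, 370, 0.3)]  # below the confidence threshold
print(nms(dets))
# -> [(100, 100, 200, 200, 0.9), (300, 300, 380, 360, 0.6)]
```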

In [26]:

import random

image_name = random.choice(glob('runs/detect/exp/*.jpg'))

display(Image(filename=image_name))

Out [26]:

Exporting the Model

In [27]:

from google.colab import drive

drive.mount('/content/drive')

Out [27]:

Mounted at /content/drive

In [28]:

%mkdir -p '/content/drive/MyDrive/Colab Notebooks/deep_learning/model/18_yolov5_pothole'

%cp /content/yolov5/runs/train/pothole_result/weights/best.pt '/content/drive/MyDrive/Colab Notebooks/deep_learning/model/18_yolov5_pothole/'

Reference

- This post summarizes the lectures of instructor 심선조 in the SeSAC course "Training Application SW Developers Using AI Natural Language Processing and Computer Vision Technologies".
