Deep Learning (19) - Image Segmentation / Detectron2

Detectron2

- 페이스북 인공지능 연구소(FAIR)에서 개발한 객체 세그멘테이션 프레임워크

- 페이스북에서 개발한 DensePose, Mask R-CNN 등을 Detectron2에서 제공

- 손쉽게 다양한 사물들을 탐지하고 세그먼테이션하여, 객체의 유형, 크기, 위치 등을 자동으로 얻을 수 있음

Detectron2 설치

- Tutorial: https://detectron2.readthedocs.io/tutorials/install.html

- Detectron2: https://dl.fbaipublicfiles.com/detectron2/wheels/cu102/torch1.9/index.html

In [1]:

!pip install pyyaml==5.3.1

!pip install torch==1.9.0+cu102 torchvision==0.10.0+cu102 -f https://download.pytorch.org/whl/torch_stable.html

!pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cu102/torch1.9/index.html

Out [1]:

Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/

Collecting pyyaml==5.3.1

Downloading PyYAML-5.3.1.tar.gz (269 kB)

...

Installing collected packages: portalocker, antlr4-python3-runtime, yacs, pathspec, omegaconf, mypy-extensions, iopath, hydra-core, fvcore, black, detectron2

Successfully installed antlr4-python3-runtime-4.9.3 black-21.4b2 detectron2-0.6+cu102 fvcore-0.1.5.post20221220 hydra-core-1.3.0 iopath-0.1.9 mypy-extensions-0.4.3 omegaconf-2.3.0 pathspec-0.10.3 portalocker-2.6.0 yacs-0.1.8

In [2]:

import torch, torchvision

print(torch.__version__, torch.cuda.is_available())

Out [2]:

1.9.0+cu102 True

In [3]:

import detectron2

from detectron2.utils.logger import setup_logger

setup_logger()

import numpy as np

import os, json, cv2, random

from google.colab.patches import cv2_imshow

from detectron2 import model_zoo

from detectron2.engine import DefaultPredictor

from detectron2.config import get_cfg

from detectron2.utils.visualizer import Visualizer

from detectron2.data import MetadataCatalog, DatasetCatalog

사전 모델

- input.jpg: http://images.cocodataset.org/val2017/000000439715.jpg

In [4]:

!wget http://images.cocodataset.org/val2017/000000439715.jpg -q -O input.jpg

im = cv2.imread('./input.jpg')

cv2_imshow(im)

Out [4]:

- Config 파일: COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml

In [5]:

model_zoo.get_checkpoint_url('COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml')

Out [5]:

'https://dl.fbaipublicfiles.com/detectron2/COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x/137849600/model_final_f10217.pkl'

In [6]:

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file('COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml')) # ResNet CNN으로 학습된 파일

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.5

# cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url('COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml')

# NotImplementedError

cfg.MODEL.WEIGHTS = '/content/drive/MyDrive/Colab Notebooks/deep_learning/model/COCO-InstanceSegmentation mask_rcnn_R_50_FPN_3x /model_final_f10217.pkl'

predictor = DefaultPredictor(cfg)

outputs = predictor(im)

Out [6]:

/usr/local/lib/python3.8/dist-packages/torch/_tensor.py:575: UserWarning: floor_divide is deprecated, and will be removed in a future version of pytorch. It currently rounds toward 0 (like the 'trunc' function NOT 'floor'). This results in incorrect rounding for negative values.

To keep the current behavior, use torch.div(a, b, rounding_mode='trunc'), or for actual floor division, use torch.div(a, b, rounding_mode='floor'). (Triggered internally at /pytorch/aten/src/ATen/native/BinaryOps.cpp:467.)

return torch.floor_divide(self, other)

/usr/local/lib/python3.8/dist-packages/torch/nn/functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at /pytorch/c10/core/TensorImpl.h:1156.)

return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)

In [7]:

print(outputs['instances'].pred_classes)

print(outputs['instances'].pred_boxes)

Out [7]:

tensor([17, 0, 0, 0, 0, 0, 0, 0, 25, 0, 25, 25, 0, 0, 24],

device='cuda:0')

Boxes(tensor([[126.6035, 244.8977, 459.8291, 480.0000],

[251.1083, 157.8127, 338.9731, 413.6379],

[114.8496, 268.6864, 148.2352, 398.8111],

[ 0.8217, 281.0327, 78.6072, 478.4210],

[ 49.3954, 274.1229, 80.1545, 342.9808],

[561.2248, 271.5816, 596.2755, 385.2552],

[385.9072, 270.3125, 413.7130, 304.0397],

[515.9295, 278.3744, 562.2792, 389.3802],

[335.2409, 251.9167, 414.7491, 275.9375],

[350.9300, 269.2060, 386.0984, 297.9081],

[331.6292, 230.9996, 393.2759, 257.2009],

[510.7349, 263.2656, 570.9865, 295.9194],

[409.0841, 271.8646, 460.5582, 356.8722],

[506.8767, 283.3257, 529.9403, 324.0392],

[594.5663, 283.4820, 609.0577, 311.4124]], device='cuda:0'))

In [8]:

v = Visualizer(im[:, :, ::-1], MetadataCatalog.get(cfg.DATASETS.TRAIN[0]), scale=1.2) # opencv에서는 bgr값을 사용

out = v.draw_instance_predictions(outputs['instances'].to('cpu'))

cv2_imshow(out.get_image()[:, :, ::-1]) # rgb로 변환

Out [8]:

커스텀 데이터셋 학습

데이터셋 준비

- Balloon 데이터셋: https://github.com/matterport/Mask_RCNN/releases/download/v2.1/balloon_dataset.zip

In [9]:

!wget https://github.com/matterport/Mask_RCNN/releases/download/v2.1/balloon_dataset.zip

!unzip balloon_dataset.zip

Out [9]:

--2022-12-20 15:21:55-- https://github.com/matterport/Mask_RCNN/releases/download/v2.1/balloon_dataset.zip

Resolving github.com (github.com)... 140.82.112.4

Connecting to github.com (github.com)|140.82.112.4|:443... connected.

HTTP request sent, awaiting response... 302 Found

Location: https://objects.githubusercontent.com/github-production-release-asset-2e65be/107595270/737339e2-2b83-11e8-856a-188034eb3468?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20221220%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20221220T152155Z&X-Amz-Expires=300&X-Amz-Signature=23022445664f266436191a599d43629f6b6720eed764569b5ccfd723ac1be44f&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=107595270&response-content-disposition=attachment%3B%20filename%3Dballoon_dataset.zip&response-content-type=application%2Foctet-stream [following]

--2022-12-20 15:21:55-- https://objects.githubusercontent.com/github-production-release-asset-2e65be/107595270/737339e2-2b83-11e8-856a-188034eb3468?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20221220%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20221220T152155Z&X-Amz-Expires=300&X-Amz-Signature=23022445664f266436191a599d43629f6b6720eed764569b5ccfd723ac1be44f&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=107595270&response-content-disposition=attachment%3B%20filename%3Dballoon_dataset.zip&response-content-type=application%2Foctet-stream

Resolving objects.githubusercontent.com (objects.githubusercontent.com)... 185.199.108.133, 185.199.109.133, 185.199.110.133, ...

Connecting to objects.githubusercontent.com (objects.githubusercontent.com)|185.199.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 38741381 (37M) [application/octet-stream]

Saving to: ‘balloon_dataset.zip’

balloon_dataset.zip 100%[===================>] 36.95M 128MB/s in 0.3s

2022-12-20 15:21:55 (128 MB/s) - ‘balloon_dataset.zip’ saved [38741381/38741381]

Archive: balloon_dataset.zip

creating: balloon/

creating: balloon/train/

inflating: balloon/train/via_region_data.json

creating: __MACOSX/

creating: __MACOSX/balloon/

creating: __MACOSX/balloon/train/

inflating: __MACOSX/balloon/train/._via_region_data.json

inflating: balloon/train/53500107_d24b11b3c2_b.jpg

inflating: __MACOSX/balloon/train/._53500107_d24b11b3c2_b.jpg

...

inflating: balloon/val/2917282960_06beee649a_b.jpg

inflating: __MACOSX/balloon/val/._2917282960_06beee649a_b.jpg

In [10]:

from detectron2.structures import BoxMode

# json 데이터 이미지로 가공하는 함수

def get_balloon_dicts(img_dir):

json_file = os.path.join(img_dir, 'via_region_data.json') # json 경로 저장 변수 지정

with open(json_file) as f:

imgs_anns = json.load(f)

dataset_dicts = []

for idx, v in enumerate(imgs_anns.values()):

record = {}

filename = os.path.join(img_dir, v['filename']) # 파일경로+이름

height, width = cv2.imread(filename).shape[:2] # 이미지 크기

record['file_name'] = filename

record['image_id'] = idx

record['height'] = height

record['width'] = width

annos = v['regions']

objs = []

for _, anno in annos.items():

anno = anno['shape_attributes']

px = anno['all_points_x']

py = anno['all_points_y']

poly = [(x+0.5, y+0.5) for x, y in zip(px, py)] # 0.5 값 조정

poly = [p for x in poly for p in x] # x, y 번갈아가며 리스트로

obj = {

'bbox':[np.min(px), np.min(py), np.max(px), np.max(py)],

'bbox_mode':BoxMode.XYXY_ABS,

'segmentation':[poly],

'category_id':0,

}

objs.append(obj)

record['annotations'] = objs

dataset_dicts.append(record)

return dataset_dicts

for d in ['train', 'val']:

DatasetCatalog.register('balloon_'+d, lambda d=d:get_balloon_dicts('balloon/'+d))

MetadataCatalog.get('balloon_'+d).set(thing_classes=['balloon'])

balloon_metadata = MetadataCatalog.get('balloon_train')

In [11]:

dataset_dicts = get_balloon_dicts('balloon/train')

for d in random.sample(dataset_dicts, 2):

img = cv2.imread(d['file_name']) # BGR로 불러옴

v = Visualizer(img, metadata=balloon_metadata, scale=0.5)

out = v.draw_dataset_dict(d)

cv2_imshow(out.get_image())

Out [11]:

학습

- Config 파일: COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml

In [12]:

from detectron2.engine import DefaultTrainer

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file('COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml'))

cfg.DATASETS.TRAIN = ('balloon_train', ) # 튜플

cfg.DATASETS.TEST = ()

cfg.DATALOADER.NUM_WORKER = 2

# cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url('COCO-InstanceSegmentation/mask_rcnn_R_50_FPN_3x.yaml')

# NotImplementedError

cfg.MODEL.WEIGHTS = '/content/drive/MyDrive/Colab Notebooks/deep_learning/model/COCO-InstanceSegmentation mask_rcnn_R_50_FPN_3x /model_final_f10217.pkl'

cfg.SOLVER.IMS_PER_BATCH = 2

cfg.SOLVER.BASE_LR = 0.00025 # learning rate

cfg.SOLVER.MAX_ITER = 300

cfg.SOLVER.STEPS = []

cfg.MODEL.ROI_HEADS.BATCH_SIZE_PER_IMAGE = 128# batch_size

cfg.MODEL.ROI_HEADS.NUM_CLASSES = 1

os.makedirs(cfg.OUTPUT_DIR, exist_ok=True)

trainer = DefaultTrainer(cfg)

trainer.resume_or_load(resume=False)

trainer.train()

Out [12]:

[12/20 15:21:58 d2.engine.defaults]: Model:

GeneralizedRCNN(

(backbone): FPN(

(fpn_lateral2): Conv2d(256, 256, kernel_size=(1, 1), stride=(1, 1))

(fpn_output2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(fpn_lateral3): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))

(fpn_output3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(fpn_lateral4): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))

(fpn_output4): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(fpn_lateral5): Conv2d(2048, 256, kernel_size=(1, 1), stride=(1, 1))

(fpn_output5): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))

(top_block): LastLevelMaxPool()

(bottom_up): ResNet(

(stem): BasicStem(

(conv1): Conv2d(

3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False

(norm): FrozenBatchNorm2d(num_features=64, eps=1e-05)

)

)

...

(mask_head): MaskRCNNConvUpsampleHead(

(mask_fcn1): Conv2d(

256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)

(activation): ReLU()

)

(mask_fcn2): Conv2d(

256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)

(activation): ReLU()

)

(mask_fcn3): Conv2d(

256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)

(activation): ReLU()

)

(mask_fcn4): Conv2d(

256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1)

(activation): ReLU()

)

(deconv): ConvTranspose2d(256, 256, kernel_size=(2, 2), stride=(2, 2))

(deconv_relu): ReLU()

(predictor): Conv2d(256, 1, kernel_size=(1, 1), stride=(1, 1))

)

)

)

[12/20 15:22:00 d2.data.build]: Removed 0 images with no usable annotations. 61 images left.

[12/20 15:22:00 d2.data.build]: Distribution of instances among all 1 categories:

| category | #instances |

|:----------:|:-------------|

| balloon | 255 |

| | |

[12/20 15:22:00 d2.data.dataset_mapper]: [DatasetMapper] Augmentations used in training: [ResizeShortestEdge(short_edge_length=(640, 672, 704, 736, 768, 800), max_size=1333, sample_style='choice'), RandomFlip()]

[12/20 15:22:00 d2.data.build]: Using training sampler TrainingSampler

[12/20 15:22:00 d2.data.common]: Serializing 61 elements to byte tensors and concatenating them all ...

[12/20 15:22:00 d2.data.common]: Serialized dataset takes 0.17 MiB

/usr/local/lib/python3.8/dist-packages/torch/utils/data/dataloader.py:478: UserWarning: This DataLoader will create 4 worker processes in total. Our suggested max number of worker in current system is 2, which is smaller than what this DataLoader is going to create. Please be aware that excessive worker creation might get DataLoader running slow or even freeze, lower the worker number to avoid potential slowness/freeze if necessary.

warnings.warn(_create_warning_msg(

WARNING:fvcore.common.checkpoint:Skip loading parameter 'roi_heads.box_predictor.cls_score.weight' to the model due to incompatible shapes: (81, 1024) in the checkpoint but (2, 1024) in the model! You might want to double check if this is expected.

WARNING:fvcore.common.checkpoint:Skip loading parameter 'roi_heads.box_predictor.cls_score.bias' to the model due to incompatible shapes: (81,) in the checkpoint but (2,) in the model! You might want to double check if this is expected.

WARNING:fvcore.common.checkpoint:Skip loading parameter 'roi_heads.box_predictor.bbox_pred.weight' to the model due to incompatible shapes: (320, 1024) in the checkpoint but (4, 1024) in the model! You might want to double check if this is expected.

WARNING:fvcore.common.checkpoint:Skip loading parameter 'roi_heads.box_predictor.bbox_pred.bias' to the model due to incompatible shapes: (320,) in the checkpoint but (4,) in the model! You might want to double check if this is expected.

WARNING:fvcore.common.checkpoint:Skip loading parameter 'roi_heads.mask_head.predictor.weight' to the model due to incompatible shapes: (80, 256, 1, 1) in the checkpoint but (1, 256, 1, 1) in the model! You might want to double check if this is expected.

WARNING:fvcore.common.checkpoint:Skip loading parameter 'roi_heads.mask_head.predictor.bias' to the model due to incompatible shapes: (80,) in the checkpoint but (1,) in the model! You might want to double check if this is expected.

WARNING:fvcore.common.checkpoint:Some model parameters or buffers are not found in the checkpoint:

roi_heads.box_predictor.bbox_pred.{bias, weight}

roi_heads.box_predictor.cls_score.{bias, weight}

roi_heads.mask_head.predictor.{bias, weight}

[12/20 15:22:04 d2.engine.train_loop]: Starting training from iteration 0

/usr/local/lib/python3.8/dist-packages/torch/utils/data/dataloader.py:478: UserWarning: This DataLoader will create 4 worker processes in total. Our suggested max number of worker in current system is 2, which is smaller than what this DataLoader is going to create. Please be aware that excessive worker creation might get DataLoader running slow or even freeze, lower the worker number to avoid potential slowness/freeze if necessary.

warnings.warn(_create_warning_msg(

[12/20 15:22:14 d2.utils.events]: eta: 0:02:14 iter: 19 total_loss: 2.14 loss_cls: 0.6942 loss_box_reg: 0.6127 loss_mask: 0.6815 loss_rpn_cls: 0.04308 loss_rpn_loc: 0.008735 time: 0.4683 data_time: 0.0371 lr: 1.6068e-05 max_mem: 2722M

[12/20 15:22:23 d2.utils.events]: eta: 0:02:00 iter: 39 total_loss: 1.798 loss_cls: 0.5861 loss_box_reg: 0.5088 loss_mask: 0.6053 loss_rpn_cls: 0.02803 loss_rpn_loc: 0.005845 time: 0.4569 data_time: 0.0119 lr: 3.2718e-05 max_mem: 2722M

...

[12/20 15:24:15 d2.utils.events]: eta: 0:00:09 iter: 279 total_loss: 0.381 loss_cls: 0.08459 loss_box_reg: 0.1678 loss_mask: 0.08661 loss_rpn_cls: 0.01101 loss_rpn_loc: 0.009393 time: 0.4664 data_time: 0.0110 lr: 0.00023252 max_mem: 2933M

[12/20 15:24:25 d2.utils.events]: eta: 0:00:00 iter: 299 total_loss: 0.2812 loss_cls: 0.06795 loss_box_reg: 0.1426 loss_mask: 0.06991 loss_rpn_cls: 0.008804 loss_rpn_loc: 0.006872 time: 0.4654 data_time: 0.0112 lr: 0.00024917 max_mem: 2933M

[12/20 15:24:25 d2.engine.hooks]: Overall training speed: 298 iterations in 0:02:18 (0.4655 s / it)

[12/20 15:24:25 d2.engine.hooks]: Total training time: 0:02:19 (0:00:00 on hooks)

In [13]:

%load_ext tensorboard

%tensorboard --logdir output

Out [13]:

<IPython.core.display.Javascript object>

추론 및 평가

In [14]:

cfg.MODEL.WEIGHTS = os.path.join(cfg.OUTPUT_DIR, 'model_final.pth')

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.7

predictor = DefaultPredictor(cfg)

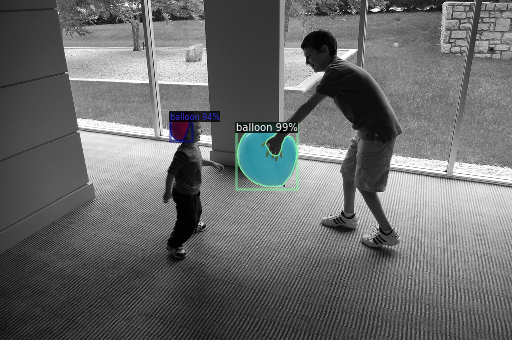

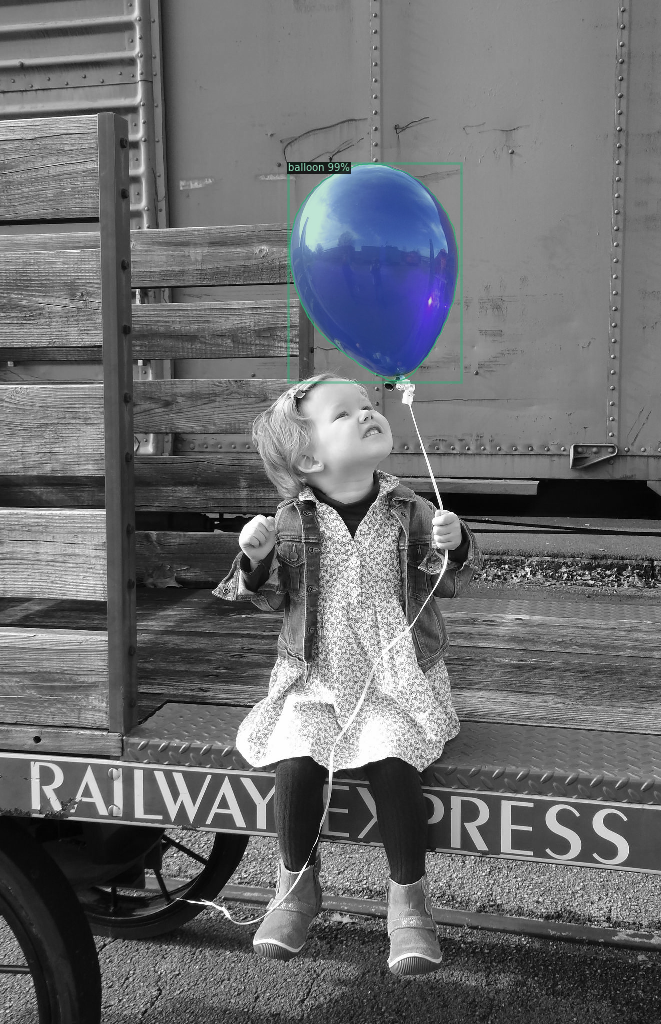

In [15]:

from detectron2.utils.visualizer import ColorMode

dataset_dicts = get_balloon_dicts('balloon/val')

for d in random.sample(dataset_dicts, 3):

img = cv2.imread(d['file_name'])

outputs = predictor(img)

v = Visualizer(img, metadata=balloon_metadata, scale=0.5,

instance_mode=ColorMode.IMAGE_BW) # detection 한 것만 원색으로

out = v.draw_instance_predictions(outputs['instances'].to('cpu'))

cv2_imshow(out.get_image())

Out [15]:

다른 타입 적용

- Config 파일: COCO-Keypoints/keypoint_rcnn_R_50_FPN_3x.yaml

In [16]:

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file('COCO-Keypoints/keypoint_rcnn_R_50_FPN_3x.yaml'))

cfg.MODEL.ROI_HEADS.SCORE_THRESH_TEST = 0.7

# cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url('COCO-Keypoints/keypoint_rcnn_R_50_FPN_3x.yaml')

# NotImplementedError

cfg.MODEL.WEIGHTS = '/content/drive/MyDrive/Colab Notebooks/deep_learning/model/COCO-Keypoints keypoint_rcnn_R_50_FPN_3x /model_final_a6e10b.pkl'

predictor = DefaultPredictor(cfg)

outputs = predictor(img)

v = Visualizer(img[:,:,::-1],MetadataCatalog.get(cfg.DATASETS.TRAIN[0]),scale=0.5)

out = v.draw_instance_predictions(outputs['instances'].to('cpu'))

cv2_imshow(out.get_image()[:,:,::-1])

Out [16]:

- Config 파일: COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml

In [17]:

cfg = get_cfg()

cfg.merge_from_file(model_zoo.get_config_file('COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml'))

# cfg.MODEL.WEIGHTS = model_zoo.get_checkpoint_url('COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml')

# NotImplementedError

cfg.MODEL.WEIGHTS = '/content/drive/MyDrive/Colab Notebooks/deep_learning/model/COCO-PanopticSegmentation panoptic_fpn_R_101_3x /model_final_cafdb1.pkl'

predictor = DefaultPredictor(cfg)

panoptic_seg,segments_info = predictor(img)['panoptic_seg']

v = Visualizer(img[:,:,::-1],MetadataCatalog.get(cfg.DATASETS.TRAIN[0]),scale=0.5)

out = v.draw_panoptic_seg_predictions(panoptic_seg.to('cpu'),segments_info)

cv2_imshow(out.get_image()[:,:,::-1])

Out [17]:

비디오 파일 적용

- https://www.youtube.com/watch?v=ll8TgCZ0plk

In [18]:

from IPython.display import YouTubeVideo, display

video = YouTubeVideo('ll8TgCZ0plk',width=600)

display(video)

Out [18]:

In [19]:

!ffmpeg -i '/content/drive/MyDrive/Colab Notebooks/deep_learning/video.mp4' -t 00:00:06 -c:v copy video-clip.mp4

Out [19]:

ffmpeg version 3.4.11-0ubuntu0.1 Copyright (c) 2000-2022 the FFmpeg developers

built with gcc 7 (Ubuntu 7.5.0-3ubuntu1~18.04)

configuration: --prefix=/usr --extra-version=0ubuntu0.1 --toolchain=hardened --libdir=/usr/lib/x86_64-linux-gnu --incdir=/usr/include/x86_64-linux-gnu --enable-gpl --disable-stripping --enable-avresample --enable-avisynth --enable-gnutls --enable-ladspa --enable-libass --enable-libbluray --enable-libbs2b --enable-libcaca --enable-libcdio --enable-libflite --enable-libfontconfig --enable-libfreetype --enable-libfribidi --enable-libgme --enable-libgsm --enable-libmp3lame --enable-libmysofa --enable-libopenjpeg --enable-libopenmpt --enable-libopus --enable-libpulse --enable-librubberband --enable-librsvg --enable-libshine --enable-libsnappy --enable-libsoxr --enable-libspeex --enable-libssh --enable-libtheora --enable-libtwolame --enable-libvorbis --enable-libvpx --enable-libwavpack --enable-libwebp --enable-libx265 --enable-libxml2 --enable-libxvid --enable-libzmq --enable-libzvbi --enable-omx --enable-openal --enable-opengl --enable-sdl2 --enable-libdc1394 --enable-libdrm --enable-libiec61883 --enable-chromaprint --enable-frei0r --enable-libopencv --enable-libx264 --enable-shared

libavutil 55. 78.100 / 55. 78.100

libavcodec 57.107.100 / 57.107.100

libavformat 57. 83.100 / 57. 83.100

libavdevice 57. 10.100 / 57. 10.100

libavfilter 6.107.100 / 6.107.100

libavresample 3. 7. 0 / 3. 7. 0

libswscale 4. 8.100 / 4. 8.100

libswresample 2. 9.100 / 2. 9.100

libpostproc 54. 7.100 / 54. 7.100

Input #0, mov,mp4,m4a,3gp,3g2,mj2, from '/content/drive/MyDrive/Colab Notebooks/deep_learning/video.mp4':

Metadata:

major_brand : mp42

minor_version : 0

compatible_brands: isommp42

creation_time : 2019-02-02T17:19:09.000000Z

Duration: 00:22:33.07, start: 0.000000, bitrate: 2507 kb/s

Stream #0:0(und): Video: h264 (Main) (avc1 / 0x31637661), yuv420p(tv, bt709), 1280x720 [SAR 1:1 DAR 16:9], 2375 kb/s, 29.97 fps, 29.97 tbr, 30k tbn, 59.94 tbc (default)

Metadata:

creation_time : 2019-02-02T17:19:09.000000Z

handler_name : ISO Media file produced by Google Inc. Created on: 02/02/2019.

Stream #0:1(und): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, stereo, fltp, 127 kb/s (default)

Metadata:

creation_time : 2019-02-02T17:19:09.000000Z

handler_name : ISO Media file produced by Google Inc. Created on: 02/02/2019.

Stream mapping:

Stream #0:0 -> #0:0 (copy)

Stream #0:1 -> #0:1 (aac (native) -> aac (native))

Press [q] to stop, [?] for help

Output #0, mp4, to 'video-clip.mp4':

Metadata:

major_brand : mp42

minor_version : 0

compatible_brands: isommp42

encoder : Lavf57.83.100

Stream #0:0(und): Video: h264 (Main) (avc1 / 0x31637661), yuv420p(tv, bt709), 1280x720 [SAR 1:1 DAR 16:9], q=2-31, 2375 kb/s, 29.97 fps, 29.97 tbr, 30k tbn, 30k tbc (default)

Metadata:

creation_time : 2019-02-02T17:19:09.000000Z

handler_name : ISO Media file produced by Google Inc. Created on: 02/02/2019.

Stream #0:1(und): Audio: aac (LC) (mp4a / 0x6134706D), 44100 Hz, stereo, fltp, 128 kb/s (default)

Metadata:

creation_time : 2019-02-02T17:19:09.000000Z

handler_name : ISO Media file produced by Google Inc. Created on: 02/02/2019.

encoder : Lavc57.107.100 aac

frame= 181 fps=0.0 q=-1.0 Lsize= 1917kB time=00:00:06.01 bitrate=2611.7kbits/s speed=28.9x

video:1816kB audio:94kB subtitle:0kB other streams:0kB global headers:0kB muxing overhead: 0.394768%

[aac @ 0x55d261521700] Qavg: 6048.023

- detectron2 github: https://github.com/facebookresearch/detectron2

- detectron2 config file: detectron2/configs/COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml

- model weights: detectron2://COCO-PanopticSegmentation/panoptic_fpn_R_101_3x/139514519/model_final_cafdb1.pkl

In [20]:

!git clone https://github.com/facebookresearch/detectron2

%run detectron2/demo/demo.py \

--config-file detectron2/configs/COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml \

--video-input video-clip.mp4 \

--confidence-threshold 0.6 \

--output video-output.mkv \

--opts MODEL.WEIGHTS '/content/drive/MyDrive/Colab Notebooks/deep_learning/model/COCO-PanopticSegmentation panoptic_fpn_R_101_3x /model_final_cafdb1.pkl'

# --opts MODEL.WEIGHTS detectron2://COCO-PanopticSegmentation/panoptic_fpn_R_101_3x/139514519/model_final_cafdb1.pkl

Out [20]:

Cloning into 'detectron2'...

remote: Enumerating objects: 14678, done.

remote: Counting objects: 100% (94/94), done.

remote: Compressing objects: 100% (68/68), done.

remote: Total 14678 (delta 37), reused 61 (delta 26), pack-reused 14584

Receiving objects: 100% (14678/14678), 6.03 MiB | 5.68 MiB/s, done.

Resolving deltas: 100% (10587/10587), done.

[12/20 15:24:51 detectron2]: Arguments: Namespace(confidence_threshold=0.6, config_file='detectron2/configs/COCO-PanopticSegmentation/panoptic_fpn_R_101_3x.yaml', input=None, opts=['MODEL.WEIGHTS', '/content/drive/MyDrive/Colab Notebooks/deep_learning/model/COCO-PanopticSegmentation panoptic_fpn_R_101_3x /model_final_cafdb1.pkl'], output='video-output.mkv', video_input='video-clip.mp4', webcam=False)

[12/20 15:24:52 fvcore.common.checkpoint]: [Checkpointer] Loading from /content/drive/MyDrive/Colab Notebooks/deep_learning/model/COCO-PanopticSegmentation panoptic_fpn_R_101_3x /model_final_cafdb1.pkl ...

[12/20 15:24:52 fvcore.common.checkpoint]: Reading a file from 'Detectron2 Model Zoo'

100%|██████████| 181/181 [02:47<00:00, 1.08it/s]

In [21]:

from google.colab import files

files.download('video-output.mkv')

Out [21]:

<IPython.core.display.Javascript object>

<IPython.core.display.Javascript object>

Reference

- 이 포스트는 SeSAC 인공지능 자연어처리, 컴퓨터비전 기술을 활용한 응용 SW 개발자 양성 과정 - 심선조 강사님의 강의를 정리한 내용입니다.

댓글남기기