자연어 처리 - 추천 시스템

추천 시스템

코사인 유사도

import pandas as pd

from sklearn.metrics.pairwise import cosine_similarity

from sklearn.feature_extraction.text import TfidfVectorizer

data = pd.read_csv('datasets/movies_metadata.csv', low_memory=False)

data.head(2)

| adult | belongs_to_collection | budget | genres | homepage | id | imdb_id | original_language | original_title | overview | ... | release_date | revenue | runtime | spoken_languages | status | tagline | title | video | vote_average | vote_count | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | False | {'id': 10194, 'name': 'Toy Story Collection', ... | 30000000 | [{'id': 16, 'name': 'Animation'}, {'id': 35, '... | http://toystory.disney.com/toy-story | 862 | tt0114709 | en | Toy Story | Led by Woody, Andy's toys live happily in his ... | ... | 1995-10-30 | 373554033.0 | 81.0 | [{'iso_639_1': 'en', 'name': 'English'}] | Released | NaN | Toy Story | False | 7.7 | 5415.0 |

| 1 | False | NaN | 65000000 | [{'id': 12, 'name': 'Adventure'}, {'id': 14, '... | NaN | 8844 | tt0113497 | en | Jumanji | When siblings Judy and Peter discover an encha... | ... | 1995-12-15 | 262797249.0 | 104.0 | [{'iso_639_1': 'en', 'name': 'English'}, {'iso... | Released | Roll the dice and unleash the excitement! | Jumanji | False | 6.9 | 2413.0 |

2 rows × 24 columns

data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 45466 entries, 0 to 45465

Data columns (total 24 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 adult 45466 non-null object

1 belongs_to_collection 4494 non-null object

2 budget 45466 non-null object

3 genres 45466 non-null object

4 homepage 7782 non-null object

5 id 45466 non-null object

6 imdb_id 45449 non-null object

7 original_language 45455 non-null object

8 original_title 45466 non-null object

9 overview 44512 non-null object

10 popularity 45461 non-null object

11 poster_path 45080 non-null object

12 production_companies 45463 non-null object

13 production_countries 45463 non-null object

14 release_date 45379 non-null object

15 revenue 45460 non-null float64

16 runtime 45203 non-null float64

17 spoken_languages 45460 non-null object

18 status 45379 non-null object

19 tagline 20412 non-null object

20 title 45460 non-null object

21 video 45460 non-null object

22 vote_average 45460 non-null float64

23 vote_count 45460 non-null float64

dtypes: float64(4), object(20)

memory usage: 8.3+ MB

data[['title', 'overview']]

| title | overview | |

|---|---|---|

| 0 | Toy Story | Led by Woody, Andy's toys live happily in his ... |

| 1 | Jumanji | When siblings Judy and Peter discover an encha... |

| 2 | Grumpier Old Men | A family wedding reignites the ancient feud be... |

| 3 | Waiting to Exhale | Cheated on, mistreated and stepped on, the wom... |

| 4 | Father of the Bride Part II | Just when George Banks has recovered from his ... |

| ... | ... | ... |

| 45461 | Subdue | Rising and falling between a man and woman. |

| 45462 | Century of Birthing | An artist struggles to finish his work while a... |

| 45463 | Betrayal | When one of her hits goes wrong, a professiona... |

| 45464 | Satan Triumphant | In a small town live two brothers, one a minis... |

| 45465 | Queerama | 50 years after decriminalisation of homosexual... |

45466 rows × 2 columns

data['overview'].isnull().sum()

954

data = data[['title', 'overview']]

data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 45466 entries, 0 to 45465

Data columns (total 2 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 title 45460 non-null object

1 overview 44512 non-null object

dtypes: object(2)

memory usage: 710.5+ KB

data.dropna(inplace=True)

data.info()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 44506 entries, 0 to 45465

Data columns (total 2 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 title 44506 non-null object

1 overview 44506 non-null object

dtypes: object(2)

memory usage: 1.0+ MB

ftidf_vec = TfidfVectorizer(stop_words='english')

tfidf_dtm = ftidf_vec.fit_transform(data['overview'])

tfidf_dtm # 44506개의 리뷰에 대해 75827 단어의 tfidf 희소 행렬

<44506x75827 sparse matrix of type '<class 'numpy.float64'>'

with 1210839 stored elements in Compressed Sparse Row format>

cos_sim_res = cosine_similarity(tfidf_dtm, tfidf_dtm)

# 타이틀을 정수화(index)

title_to_index = dict(zip(data['title'], data.index))

# 'Toy Story': 0, 'Jumanji': 1, 'Grumpier Old Men': 2...

def get_recommendation(title, n):

idx = title_to_index[title]

sim_scores = list(enumerate(cos_sim_res[idx])) # 해당 영화와 나머지 영화들의 유사도를 idx와 함께 list로

sim_scores = sorted(sim_scores, key=lambda x: x[1], reverse=True) # 유사도를 기준(key)으로 내림차순 정렬

sim_scores_n = sim_scores[1:n+1] # 유사도가 동일(title==title)한 첫번째를 제외하고 n번째까지

movie_idx = [movie_dict[0] for movie_dict in sim_scores_n]

return data['title'].iloc[movie_idx]

get_recommendation('Toy Story', 5)

15348 Toy Story 3

2997 Toy Story 2

10301 The 40 Year Old Virgin

24523 Small Fry

23843 Andy Hardy's Blonde Trouble

Name: title, dtype: object

get_recommendation('Batman', 5)

8681 Scars of Dracula

18562 Johnny Cash at Folsom Prison

6600 The Prince and the Pauper

5355 Nosferatu the Vampyre

37915 Vaesen

Name: title, dtype: object

get_recommendation('The Dark Knight Rises', 5)

31143 Deadly Daycare

19286 The One Percent

44918 Once More

45106 Nicostratos the Pelican

33008 White Cannibal Queen

Name: title, dtype: object

word2vec, 임베딩

딥러닝과 잘 어울림

sklearn의 TfidfVectorizer는 머신러닝과 잘 어울림

import pandas as pd

train_data = pd.read_table('datasets/ratings.txt')

train_data.head()

| id | document | label | |

|---|---|---|---|

| 0 | 8112052 | 어릴때보고 지금다시봐도 재밌어요ㅋㅋ | 1 |

| 1 | 8132799 | 디자인을 배우는 학생으로, 외국디자이너와 그들이 일군 전통을 통해 발전해가는 문화산... | 1 |

| 2 | 4655635 | 폴리스스토리 시리즈는 1부터 뉴까지 버릴께 하나도 없음.. 최고. | 1 |

| 3 | 9251303 | 와.. 연기가 진짜 개쩔구나.. 지루할거라고 생각했는데 몰입해서 봤다.. 그래 이런... | 1 |

| 4 | 10067386 | 안개 자욱한 밤하늘에 떠 있는 초승달 같은 영화. | 1 |

train_data.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 200000 entries, 0 to 199999

Data columns (total 3 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 id 200000 non-null int64

1 document 199992 non-null object

2 label 200000 non-null int64

dtypes: int64(2), object(1)

memory usage: 4.6+ MB

train_data.dropna(inplace=True)

train_data.info()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 199992 entries, 0 to 199999

Data columns (total 3 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 id 199992 non-null int64

1 document 199992 non-null object

2 label 199992 non-null int64

dtypes: int64(2), object(1)

memory usage: 6.1+ MB

# 한글 이외 제거

train_data['document'] = train_data['document'].str.replace('[^ㄱ-ㅎㅏ-ㅣ가-힣 ]', '') # ㄱ-ㅎ,ㅏ-ㅣ,가-힣,공백 제외

C:\Users\user\AppData\Local\Temp\ipykernel_14604\1359601085.py:2: FutureWarning: The default value of regex will change from True to False in a future version.

train_data['document'] = train_data['document'].str.replace('[^ㄱ-ㅎㅏ-ㅣ가-힣 ]', '') # ㄱ-ㅎ,ㅏ-ㅣ,가-힣,공백 제외

# ㄱ-ㅣ는 가능하지만 ㄱ-힣은 가능하지 않다.

print(ord('ㄱ'), ord('ㅎ'))

print(ord('ㅏ'), ord('ㅣ'))

print(ord('가'), ord('힣'))

12593 12622

12623 12643

44032 55203

from konlpy.tag import Okt

okt = Okt()

stopwords = ["의", "가", "이", "은", "는",

"들", "좀", "잘", "강", "과",

"도", "를", "으로", "자", "에",

"와", "한", "하다"]

from tqdm import tqdm

toked_data=[]

for sen in tqdm(train_data['document']):

toked_sen = okt.morphs(sen, stem=True)

toked_sen_wo_stop = [word for word in toked_sen if not word in stopwords]

toked_data.append(toked_sen_wo_stop)

100%|█████████████████████████████████████████████████████████████████████████| 199992/199992 [09:49<00:00, 339.43it/s]

# 리뷰 최대 길이

max(len(review) for review in toked_data)

72

# 리뷰 최소 길이

min(len(review) for review in toked_data)

0

# 리뷰 평균 길이

sum(len(review) for review in toked_data)/len(toked_data)

10.719648785951438

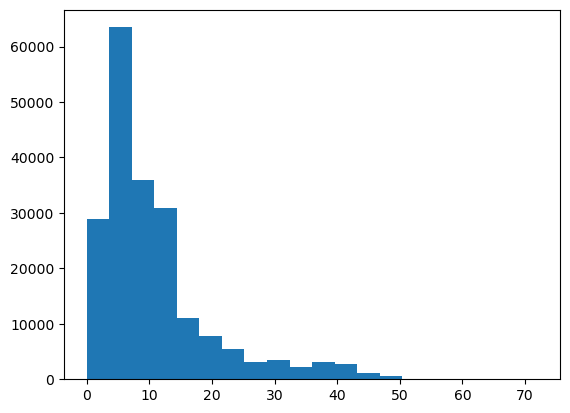

import matplotlib.pyplot as plt

# 히스토그램 그려보기

plt.hist([len(review) for review in toked_data], bins=20)

plt.show()

from gensim.models import Word2Vec

model = Word2Vec(sentences=toked_data, vector_size=100, window=5, min_count=5, workers=4, sg=0)

model.wv.vectors.shape # 임베딩 행렬(단어수, 표현력) 단어당 100차원의 특성을 저장

(16477, 100)

model.wv.vectors

array([[-2.8263861e-03, 9.8568387e-02, -9.4999635e-01, ...,

-8.6086142e-01, 1.3070868e-01, 8.3628201e-01],

[-1.3327576e+00, 5.4933226e-01, -4.0492460e-01, ...,

-9.6057999e-01, -1.1362101e+00, 6.1550405e-02],

[-1.5236272e+00, 2.4189599e-01, -1.3285520e+00, ...,

-8.9836246e-01, 2.8833647e+00, 1.5803616e-01],

...,

[-3.3974819e-02, -1.4981081e-02, 5.9175860e-02, ...,

-8.6746484e-02, 4.4356149e-02, -6.0275033e-02],

[-2.4699664e-02, 3.8863931e-02, -7.7239783e-03, ...,

-1.0443001e-01, -2.6425140e-02, -9.8955519e-03],

[-1.2799753e-02, 2.7629824e-02, -1.4718298e-03, ...,

-4.9694967e-02, 1.4989703e-02, 3.4072924e-02]], dtype=float32)

model.wv.most_similar('송혜교')

[('이종석', 0.892828643321991),

('남상미', 0.8924018740653992),

('김소연', 0.8526328206062317),

('이민정', 0.8493728041648865),

('신현준', 0.8422029614448547),

('주지훈', 0.8388743996620178),

('손예진', 0.8374811410903931),

('송윤아', 0.8355734944343567),

('서우', 0.8328922390937805),

('김동완', 0.8306312561035156)]

사전 학습된 모델

import gensim

pre_trained_word2vec = gensim.models.KeyedVectors.load_word2vec_format('datasets/GoogleNews-vectors-negative300.bin', binary=True)

pre_trained_word2vec.vectors.shape # 단어:3000000, 차원:300

(3000000, 300)

pre_trained_word2vec.similarity('this', 'is')

0.40797037

pre_trained_word2vec.similarity('food', 'book')

0.10171259

pre_trained_word2vec.similarity('chicken', 'pizza')

0.37984928

댓글남기기